What do DMRs and EDDs have in common that pains us?

Locus Data Managers, Marian Carr and Jen Grippa, voice some common issues and frustrations with regulatory reporting.

Becoming water positive is more difficult than becoming carbon positive, both in practice and in tracking complex water data. Less than a decade ago, experts questioned whether it was even feasible to have a net-positive impact on water. Perhaps the biggest reason for the difficulty is water's relative volatility compared with carbon. Seasonal changes in rainfall, as well as droughts and floods, effectively make water consumption a non-zero-sum game. And with water, quality matters more than volume. Today, companies and organizations increasingly see the goal as attainable.

Organizations are now aiming for a net-positive impact on both water volume and quality. Microsoft, for example, recently announced its goal of becoming water positive by 2030. The goal is impressive, but also complex and multi-faceted. The company plans to increase freshwater collection, lower consumption, work with various agencies and NGOs on regulatory changes, and, perhaps most importantly, digitize its water data.

Why is this goal so important? Almost a third of the world’s population, over 2.2 billion people, lack access to safe, clean water. As chronic shortages become more common and demand rises, the need for fresh water will only grow more acute. Organizations aiming for water positivity can help slow the decline in water availability.

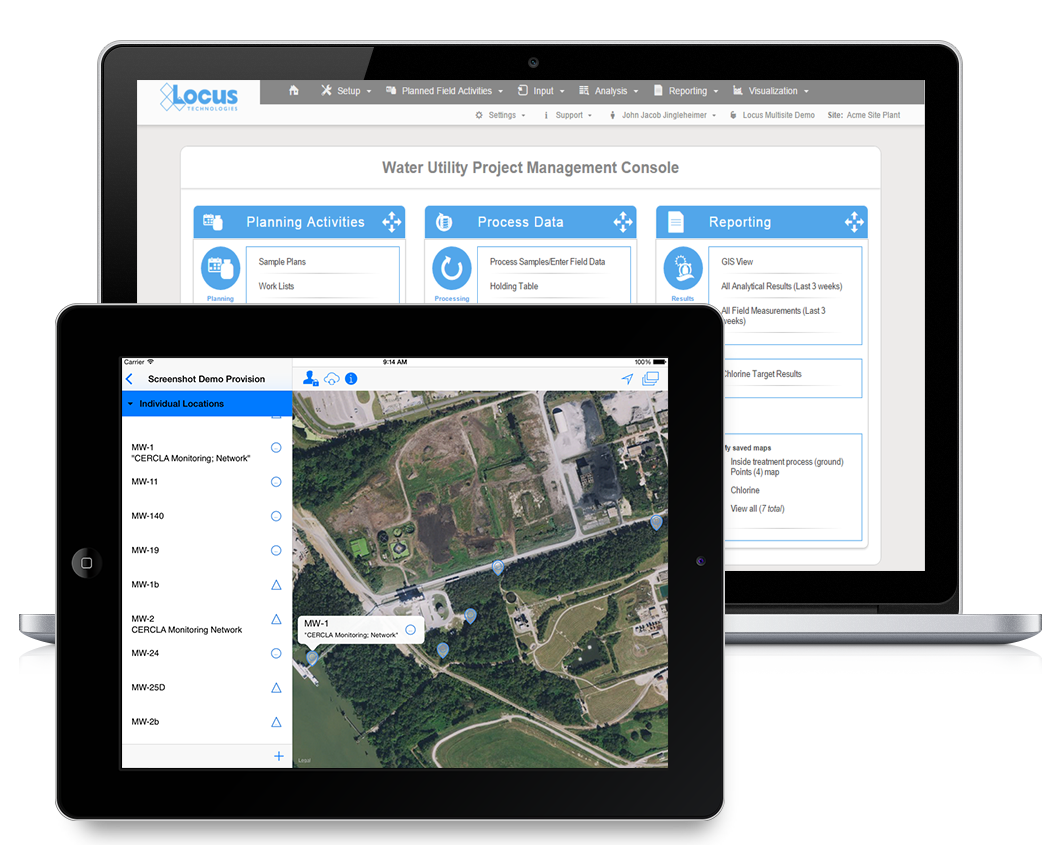

Where does Locus come in? We can’t solve a problem that we can’t understand. With Locus software, companies and organizations can accurately track and report complex groundwater and surface water data. Our calculation engine can deliver real-time estimates of supply and demand, and our water quality software can manage sample planning and configure notifications for late or missing samples or for exceedances of predefined limits. Our water quality solutions, long used by utilities like San Jose Water Company and Santa Clara Valley Water, can also give businesses a clearer view of their water consumption, providing the tools they need to become water positive.
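To make the notification idea concrete, here is a minimal Python sketch of the two checks described above: flagging results that exceed a predefined limit, and flagging scheduled samples that are late or missing. The `Result` class, the `LIMITS` values, the seven-day grace window, and both function names are hypothetical illustrations, not Locus APIs or regulatory limits.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical predefined limits in mg/L, for illustration only.
LIMITS = {"nitrate": 10.0, "arsenic": 0.010}


@dataclass
class Result:
    location: str
    parameter: str
    value: float  # measured concentration, mg/L
    sampled_on: date


def exceedances(results, limits=LIMITS):
    """Return results whose value is above the predefined limit for their parameter."""
    return [r for r in results
            if r.parameter in limits and r.value > limits[r.parameter]]


def late_or_missing(schedule, results, today, grace_days=7):
    """Return scheduled (location, parameter, due_date) entries with no sample
    within +/- grace_days of the due date, once that grace period has passed."""
    window = timedelta(days=grace_days)
    flagged = []
    for location, parameter, due in schedule:
        collected = any(
            r.location == location and r.parameter == parameter
            and due - window <= r.sampled_on <= due + window
            for r in results
        )
        if not collected and today > due + window:
            flagged.append((location, parameter, due))
    return flagged
```

Keeping the two checks separate lets exceedance alerts (a compliance event) and schedule alerts (a field-operations event) be routed to different people, which is one reasonable design among several.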

Regardless of your organization's size or industry, chances are that artificial intelligence can already benefit your EHS&S initiatives in one way or another. Whether you are ready for it or not, the age of artificial intelligence is coming. Forward-thinking, adaptive businesses are already using AI in EHS&S to great success as a competitive advantage in the marketplace.

With modern EHS&S software, immense amounts of computing power, and seemingly endless cloud storage, you now have the tools to achieve fully realized AI for your EHS&S program. And while you may not be ready to take the plunge into AI just yet, there are steps you can take now to prepare your EHS&S program for implementing artificial intelligence in the future.

Perhaps the best aspect of preparing for AI implementation is that all of the steps you take to properly bring about an AI system will benefit your program even before the deployment phase. Accurate sources, validated data, and one system of record are all important factors for any EHS&S team.

Used alongside big data, AI can draw inferences and conclusions about many aspects of life faster and more efficiently than human analysis can, but only if your sources pull accurate data. Accurate source data will help your organization regardless of your current level of AI usage. That’s why the first step to implementing artificial intelligence is auditing your data sources.

A few common best practices help ensure that your sources pull accurate data. First, separate your data repository from the process that analyzes the data. This allows you to repeat the same analysis on different sets of data without worrying about whether the analysis can be replicated. AI also requires moving away from Excel-based or in-house software to a modern EHS&S software, like Locus Platform, that audits your data as it is entered. This means that anything from SCADA data to historical outputs, samples, and calculations can be entered and vetted. Finally, consider checking your data against other sources and performing exploratory analysis to further legitimize your data.
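The first and last practices above can be sketched in a few lines of Python: the analysis is a plain function that takes records as input, so the same step can be rerun unchanged on any dataset, and a second function compares results from two independent sources. The record layout, the 10% relative tolerance, and the function names are assumptions for illustration only.

```python
from statistics import mean


def monthly_mean(records, parameter):
    """Analysis kept separate from storage: records in, {"YYYY-MM": mean value} out."""
    by_month = {}
    for rec in records:
        if rec["parameter"] == parameter:
            by_month.setdefault(rec["date"][:7], []).append(rec["value"])
    return {month: mean(values) for month, values in sorted(by_month.items())}


def cross_check(primary, secondary, tolerance=0.10):
    """Return keys where the secondary source is missing or differs from the
    primary source by more than `tolerance` (relative to the larger value)."""
    mismatches = []
    for key, a in primary.items():
        b = secondary.get(key)
        if b is None or abs(a - b) > tolerance * max(abs(a), abs(b)):
            mismatches.append(key)
    return mismatches
```

Because `monthly_mean` never touches the repository directly, rerunning it on a corrected or expanded dataset reproduces the same analysis exactly.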

AI requires data, and a lot of it—aggregated from multiple sources. But no amount of predictive analysis or machine learning is going to be worth anything without proper data validation processes.

Collected data must be relevant to the problem you are trying to solve, and it must be validated, which is a truly difficult ask with Excel, in-house platforms, and other EHS&S software. Input checks, range checks, and consistency checks, to name a few, are all built into a modern EHS&S software like Locus Platform. Without these checks inherent to a platform, you cannot be sure that your data or your analyses are producing useful or accurate results.
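As a rough illustration of what such checks look like, the Python sketch below validates a single record for a known parameter, a numeric value, a plausible range, the expected unit, and consistent dates. The parameters, ranges, units, and messages are hypothetical; real validation rules are program-specific and far more extensive.

```python
from datetime import date

# Hypothetical rules for illustration; not taken from any real validation library.
RANGES = {"pH": (0.0, 14.0), "temperature_c": (-5.0, 60.0)}
EXPECTED_UNITS = {"pH": "SU", "temperature_c": "C"}


def validate(record):
    """Return a list of human-readable problems; an empty list means the record passed."""
    problems = []
    param = record.get("parameter")
    value = record.get("value")
    if param not in RANGES:
        return [f"unknown parameter: {param!r}"]
    # Input check: value must be numeric (bool is excluded even though it subclasses int).
    if not isinstance(value, (int, float)) or isinstance(value, bool):
        problems.append(f"{param}: value must be numeric, got {value!r}")
    else:
        low, high = RANGES[param]  # range check
        if not low <= value <= high:
            problems.append(f"{param}: {value} outside range [{low}, {high}]")
    if record.get("unit") != EXPECTED_UNITS[param]:  # consistency check on units
        problems.append(f"{param}: expected unit {EXPECTED_UNITS[param]}, got {record.get('unit')!r}")
    sampled, analyzed = record.get("sampled_on"), record.get("analyzed_on")
    if sampled and analyzed and analyzed < sampled:  # consistency check on dates
        problems.append("analysis date precedes sample date")
    return problems


# Example: a pH reading entered with the wrong unit and an out-of-range value.
print(validate({"parameter": "pH", "value": 15.1, "unit": "pH",
                "sampled_on": date(2024, 1, 2), "analyzed_on": date(2024, 1, 3)}))
```

Returning every problem at once, rather than stopping at the first, lets the person entering data fix a record in one pass.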

Possibly the best reason to get started with AI is the waterfall effect: as your AI system uncovers hidden insights and learns from your data, your new data becomes more accurate and your predictions improve.

A unified system of record and a central repository for all data mean an immediate increase in data quality. Starting with AI means the end of disconnected EHS&S systems. No more transferring data from one platform to another or from pen and paper, just fully digitized, mobile-enabled data in one platform backed up in the cloud. You also gain the ability to access your data in real time, incorporate compliance and reporting on the fly, and save time and resources using a scalable solution instead of a web of spreadsheets and ad hoc databases.

Whether or not you are ready for AI, investing in these steps, which are useful in their own right, is necessary for any program looking to harness the power of artificial intelligence. When you are ready to take the next step, you will be well on the path to AI implementation, with a solid data infrastructure in place for your efforts.

To learn more about artificial intelligence, view this NAEM-hosted webinar led by Locus experts, or read our study on predicting water quality using machine learning.

More recently, big data has become more closely tied to IoT-generated streaming datasets from sources such as continuous emission monitoring systems (CEMS), real-time remote control and monitoring of treatment systems, water quality monitoring instrumentation, wireless sensors, and wearable and other mobile devices. Add digitized historical records to these data streams, and you end up with a deluge of data. (To learn more about big data and IoT trends in the EHS industry, please read this article: Keeping the Pulse on the Planet using Big Data.)

In the 1989 “Hazardous Data Explosion” article that I mentioned earlier, we first identified the limitations of relational database technology in interpreting data, and the important role that IoT (then called automation) and AI were going to play in the EHS industry. We wrote:

“It seems unavoidable that new or improved automated data processing techniques will be needed as the hazardous waste industry evolves. Automation [read IoT] can provide tools that help shorten the time it takes to obtain specific test results, extract the most significant findings, produce reports and display information graphically.”

We also claimed that expert systems (software programmed using artificial intelligence techniques that draws on databases of expert knowledge to offer advice or make decisions) and AI could be possible solutions. These technologies have been a long time coming but still have a promising future in the context of big data.

“Currently used in other technical fields, expert systems employ methods of artificial intelligence for interpreting and processing large bodies of information.”

Although expert systems did not become the backbone of AI that researchers originally envisioned, they were a necessary step toward using big data to fulfill the role of an “expert.”

AI can be harnessed in a wide range of EHS compliance activities and situations to contribute to managing environmental impacts and climate change. Some examples of application include AI-infused permit management, AI-based permit interpretation and response to regulatory agencies, precision sampling, predicting natural attenuation of chemicals in water, managing sustainable supply chains, automating environmental monitoring and enforcement, and enhanced sampling and analysis based on real-time weather forecasts.

Parts one, three, and four of this blog series complete the overview of Big Data, IoT, AI and multi-tenancy. We look forward to feedback on our ideas and are interested in hearing where others see the future of AI in EHS software – contact us for more discussion or ideas!

On 12 April 2019, Locus’ Founder and CEO, Neno Duplan, received the prestigious Carnegie Mellon 2019 CEE (Civil and Environmental Engineering) Distinguished Alumni Award for outstanding accomplishments at Locus Technologies. In light of this recognition, Locus decided to dig into our blog vault and share a series of visionary blogs written by our Founder in 2016. These ideas are as timely and relevant today as they were three years ago, and they hark back to his formative years at Carnegie Mellon, which laid the foundation for Locus Technologies’ current success as a top innovator in the water and EHS compliance space.

It is funny how a single acronym can take you back in time. A few weeks ago when I watched 60 Minutes’ segment on AI (Artificial Intelligence) research conducted at Carnegie Mellon University, I was taken back to the time when I was a graduate student at CMU and a member of the AI research team for geotechnical engineering. Readers who missed this program on October 9, 2016, can access it online.

Fast forward thirty plus years and AI is finally ready for prime time television and a prominent place among the disruptive technologies that have so shaken our businesses and society. This 60 Minutes story prompted me to review the progress that has occurred in the field of AI technology, why it took so long to come to fruition, and the likely impact it will have in my field of environmental and sustainability management. I discuss these topics below. I also describe the steps that we at Locus have taken to put our customers in the position to capitalize on this exciting (but not that new) technology.

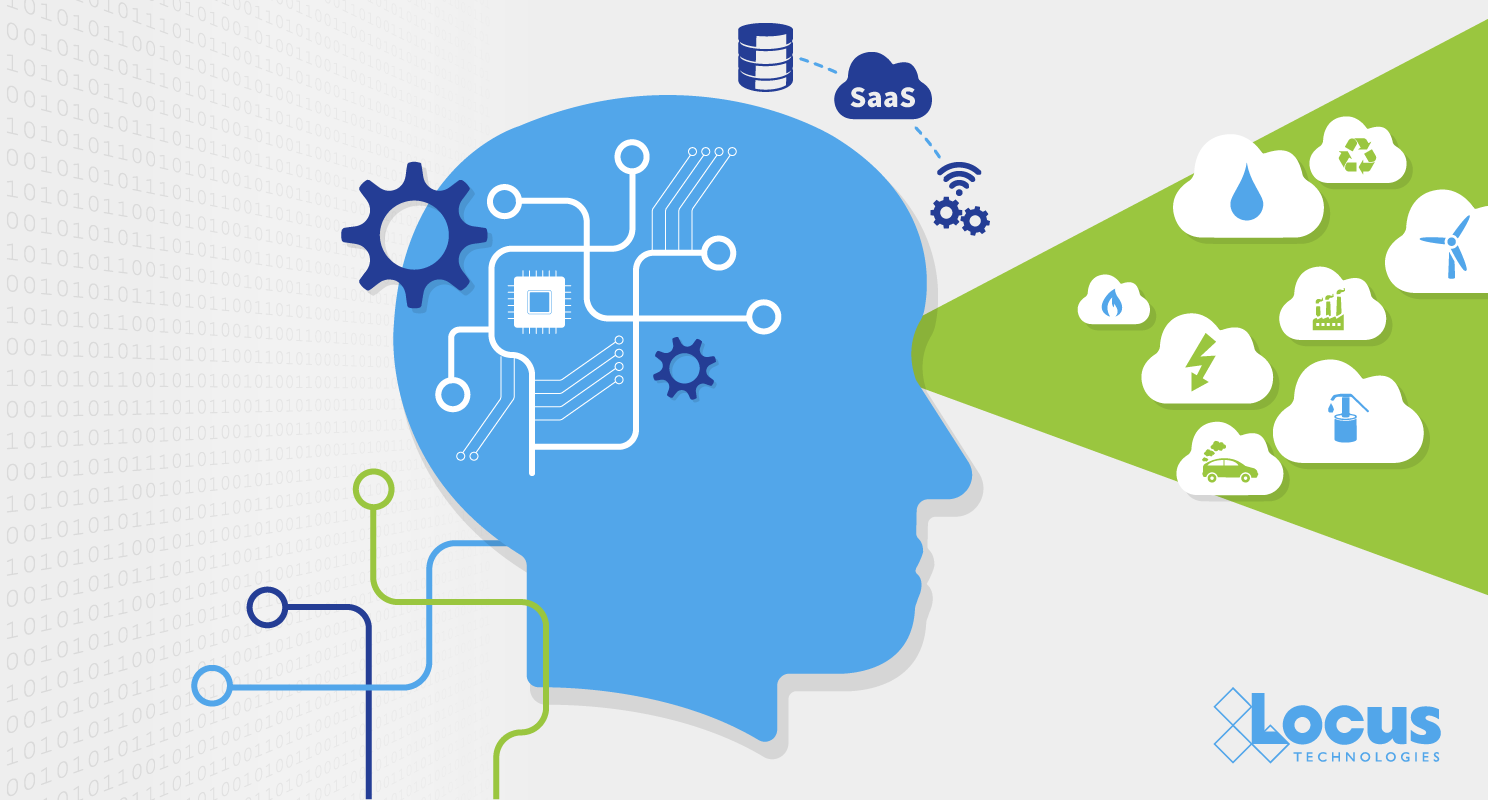

What I could not have predicted when I was at Carnegie Mellon is that AI would take a long time to mature, almost the full span of one’s professional career. The reasons for this are multiple, the main one being that several other technologies were absent or needed to mature before the promises of AI could be realized. These are now in place. Before I dive into AI and its potential impact on the EHS space, let me touch on these “other” major (disruptive) technologies without which AI would not be possible today: SaaS, Big Data, and IoT (Internet of Things).

As standalone technologies, each of these has brought about profound changes in both the corporate and consumer worlds. However, these impacts are small when compared to the impact all three of these will have when combined and interwoven with AI in the years to come. We are only in the earliest stages of the AI computing revolution that has been so long in the coming.

I have written extensively about SaaS, Big Data, and IoT over the last several decades. All these technologies have been an integral part of Locus’ SaaS offering for many years now, and they have proven their usefulness by rewarding Locus with contracts from major Fortune 500 companies and the US government. Let me quickly review these before I dive into AI (as AI without them is not a commercially viable technology).

Massive quantities of new information from monitoring devices, sensors, treatment system controls and monitoring, and customer legacy databases are now pouring into companies’ EHS departments, which have few tools to analyze them on arrival. Some of the data is old information that is newly digitized, such as analytical chemistry records, but other information, like streaming data from wireless and wired monitoring sensors, is entirely new. At this point, most of these data streams are highly balkanized, as most companies lack a single system of record to accommodate them. However, that is all about to change.

As a graduate student at Carnegie Mellon in the early eighties, I was involved in the exciting R&D project of architecting and building the first AI-based expert system for subsurface site characterization, not an easy task even by today’s standards and technology. AI technology at the time was in its infancy, but we were able to build a prototype system for geotechnical site characterization that provided advice on data interpretation and on inferring the depositional geometry and engineering properties of subsurface geology from a limited number of data points. The other components of the research included a relational database to store the site data, graphics to produce “alternative stratigraphic images,” and networked workstations to carry out the numerical and algorithmic processing. All of this transpired before the onset of the internet revolution and before acronyms like SaaS or IoT had entered our vocabulary. This early research led to the development of a set of commercial tools and technological improvements and ultimately to the formation of Locus Technologies in 1997.

Part of this early research included management of big data, which is necessary for any AI undertaking. As a continuation of this work at Carnegie Mellon, Dr. Greg Buckle and I published an article in 1989 about the challenges of managing massive amounts of data generated from testing and long-term monitoring of environmental projects. This was at a time when spreadsheets and paper documents were king, and relational databases were little used for storing environmental data.

The article, “Hazardous Data Explosion,” published in the December 1989 issue of the ASCE Civil Engineering Magazine, was among the first of its kind to discuss the upcoming Big Data boom within the environmental space and placed us securely at the forefront of the big data craze. This article was followed by a sequel in the same magazine in 1992, titled “Taming Environmental Data,” that described the first prototype solution to managing environmental data using relational database technology. In the intervening years, this prototype eventually became the basis of the industry’s first multi-tenant SaaS system for environmental information management.

Today, the term big data has become a staple across various industries to describe the enormity and complexity of datasets that need to be captured, stored, analyzed, visualized, and reported. Although the concept may have gained public popularity relatively recently, big data has been a formidable fixture in the EHS industry for decades. Initially, big data in the EHS space was almost entirely associated with the results of analytical, geotechnical, and field testing of water, groundwater, soil, and air samples in the field and laboratory. Locus’ launch of its Internet-based Environmental Information Management (EIM) system in 1999 was intended to provide companies not only with a repository to store such data, but also with the means to upload such data into the cloud and the tools to analyze, organize, and report on these data.

In the future, companies that wish to remain competitive will have no choice but to bring together their streams of (seemingly) unrelated and often siloed big data into systems such as EIM that allow them to evaluate and assess their environmental data with advanced analytics capabilities. Big data coupled with intelligent databases can offer real-time feedback to EHS compliance managers, who can then better track and offset company risks. Without the big data revolution, there would be no coming AI revolution.

There has been much talk about how artificial intelligence (AI) will affect various aspects of our lives, but little has been said to date about how the technology can improve water quality management. The recent growth in AI spells a big opportunity for this field. AI has enormous potential to become an essential tool for water management and for decoupling water and climate change issues.

Two disruptive megatrends, digital transformation and the decarbonization of the economy, could come together in the future. AI could make a significant dent in global greenhouse gas (GHG) emissions merely by providing better tools to manage water. Treating and moving water consumes large amounts of energy, and AI can help optimize both.

AI is a collective term for technologies that can sense their environment, think, learn, and take action in response to what they detect and to their objectives. Applications range from automating routine tasks, like sampling and analyzing water samples, to augmenting human decision-making, and beyond that to automating water treatment systems and to discovery: sifting vast amounts of data to spot and act on patterns that are beyond our current capabilities.

Applying AI in water resource prediction, management and monitoring can help to ameliorate the global water crisis by reducing or eliminating waste, as well as lowering costs and lessening environmental impacts.

Parts two, three, and four of this blog series complete the overview of Big Data, IoT, AI and multi-tenancy. We look forward to feedback on our ideas and are interested in hearing where others see the future of AI in EHS software – contact us for more discussion or ideas!

Blockchain is a highly disruptive technology that promises to change the world as we know it, much like the World Wide Web’s impact after its introduction in 1991. As companies look to the blockchain model to perform financial transactions, trade stocks, and create open market spaces, many other industries are looking at utilizing blockchain technology to eliminate the middleman. One sector well-positioned to benefit from blockchain technology is the data-intensive Environment, Health, Safety and Sustainability (EHS&S) space.

In particular, I see three major ways that the EHS industry can use blockchain technology to change how it manages information: 1) blockchain-based IoT monitoring, 2) emissions management, and 3) emissions trading.

My belief is that blockchain technology will help to quantify the impact of man-made emissions on global warming trends and provide tools to manage it. One cannot manage what one cannot measure!

Imagine this: every emissions source in your company, whether to water, air, or soil, is connected wirelessly via a sensor or another device (thing) to a blockchain ledger that stores a description of the source, its location, emission factors, etc. Every time the source generates emissions (that is, whenever it is on), all necessary parameters are recorded in real time. If air emissions are involved, tons of carbon dioxide equivalent (CO2e) are calculated, recorded in a blockchain ledger, and made available to reporting and trading entities in real time.
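A toy version of this idea fits in a short Python sketch: each record stores the source, a timestamp, per-gas emissions, and the computed CO2e, and each record's SHA-256 hash covers the previous record's hash, so later tampering is detectable. This is a single-process, hash-chained list, not a distributed blockchain with consensus. The class and function names are hypothetical, and the global warming potentials assume IPCC AR5 100-year values, which a given reporting program may not use.

```python
import hashlib
import json

# Assumed IPCC AR5 100-year global warming potentials; programs may require other values.
GWP_100 = {"CO2": 1, "CH4": 28, "N2O": 265}


def co2e_tonnes(emissions_tonnes):
    """Convert per-gas emissions (tonnes) into tonnes of CO2 equivalent."""
    return sum(mass * GWP_100[gas] for gas, mass in emissions_tonnes.items())


def _digest(entry):
    """SHA-256 over a canonical (key-sorted) JSON encoding of the entry."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class EmissionsLedger:
    """Append-only, hash-chained list of emission records (a toy, not a blockchain network)."""

    GENESIS = "0" * 64

    def __init__(self):
        self.blocks = []

    def append(self, source_id, timestamp, emissions_tonnes):
        prev_hash = self.blocks[-1]["hash"] if self.blocks else self.GENESIS
        entry = {
            "source_id": source_id,
            "timestamp": timestamp,
            "emissions_tonnes": dict(emissions_tonnes),  # copy so callers cannot mutate it
            "co2e_tonnes": co2e_tonnes(emissions_tonnes),
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)  # hash computed before the "hash" key exists
        self.blocks.append(entry)
        return entry

    def verify(self):
        """Return True if every block's hash and back-link to its predecessor are intact."""
        prev_hash = self.GENESIS
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != block["hash"]:
                return False
            prev_hash = block["hash"]
        return True
```

Because each hash covers the previous one, editing any earlier record breaks verification from that point on; sharing copies of the chain among reporting and trading entities is what a real blockchain adds on top.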

Blockchain ledgers may exist at many levels. Some may record emissions at a given site. Others at higher levels (company, state or province, country, continent, etc.) may roll up information from lower level ledgers.
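Rolling lower-level ledgers up to higher levels amounts to adding each site's total to every ancestor in a hierarchy. The Python sketch below assumes a hypothetical `parent_of` mapping (site to company to state, and so on) that contains no cycles, and per-site CO2e totals that have already been read from each site ledger.

```python
from collections import defaultdict


def roll_up(site_totals, parent_of):
    """Add each site's CO2e total to the site itself and to every ancestor above it.

    site_totals: {site_id: tonnes CO2e read from that site's ledger}
    parent_of:   {node_id: parent_id}; nodes with no entry are top level.
    The hierarchy is assumed to be acyclic.
    """
    totals = defaultdict(float)
    for node, co2e in site_totals.items():
        while node is not None:
            totals[node] += co2e
            node = parent_of.get(node)  # None once we pass the top level
    return dict(totals)


hierarchy = {"site-A": "acme", "site-B": "acme", "acme": "california"}
print(roll_up({"site-A": 120.0, "site-B": 80.0}, hierarchy))
```

Each higher level is derived from the level below rather than entered separately, so company or state totals cannot drift from the site records they summarize.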

Suppose that emissions are traded so that they are not yours anymore. In that case, someone else owns them, and you do not need to report them again, but everyone knows that you were the generating source. The same logic can be applied to tier 1, 2, and 3 level emissions. Attached to the emissions ledger is all other necessary information about the asset generating those emissions: financial information, depreciation schedule, time in service, operating time, fuel consumption, operators’ names, an estimate of future emissions. The list goes on.
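The ownership rule described above can be sketched in Python with a record whose generating source is fixed while its owner changes on each trade: reporting counts only what an entity currently owns, yet an origin query still finds the generating source. The `EmissionsRecord` class and both functions are hypothetical, and a real system would also record each transfer on the ledger itself.

```python
from dataclasses import dataclass, field


@dataclass
class EmissionsRecord:
    """Hypothetical tradable emissions record; field names are illustrative."""
    record_id: str
    generated_by: str        # the generating source; never changes after creation
    co2e_tonnes: float
    owner: str               # the entity currently responsible for reporting
    prior_owners: list = field(default_factory=list)  # oldest first

    def transfer(self, new_owner):
        """Move ownership to new_owner while keeping the generating source on record."""
        if new_owner == self.owner:
            raise ValueError(f"record {self.record_id} is already owned by {new_owner}")
        self.prior_owners.append(self.owner)
        self.owner = new_owner


def reportable_tonnes(records, entity):
    """Total CO2e an entity reports: only the records it currently owns."""
    return sum(r.co2e_tonnes for r in records if r.owner == entity)


def generated_tonnes(records, source):
    """Total CO2e originating at a source, regardless of who owns it now."""
    return sum(r.co2e_tonnes for r in records if r.generated_by == source)
```

Separating `owner` from `generated_by` is what prevents double reporting after a trade while still keeping the original source visible to everyone.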

To learn more about how blockchain technology will impact emissions monitoring, management, reporting, and trading, click here.

I recently reviewed an article published by Bessemer Venture Partners in 2012 titled “Bessemer’s Top 10 Laws of Cloud Computing.” I wanted to check how accurate their cloud computing predictions were four years later. It is amazing how well they predicted market trends and how well the 10 Laws of Cloud Computing still hold today. Nowhere is this more relevant than in my industry, environmental, health, safety, and sustainability software, which is still struggling with a definition of cloud computing and hoping that legacy systems will somehow help it deal with the avalanches of data stemming from EHS compliance and sustainability management activities. They will not. I repeat here excerpts from the original 10 Laws of Cloud Computing. The whole article can be viewed or downloaded from here.

“Although this is finally becoming more widely accepted as a best practice, we must still emphasize the importance of building a single instance, multi-tenant product, with a single version of code in production. “Just say no!” to on-premises deployments. Multi-instance, single tenant offerings should only apply to legacy software companies moving to a dedicated hosting model because they don’t have the luxury of an architectural redesign. Of course it is possible to use virtualization to provide multiple instances, but this hybrid strategy will make your engineering team much more expensive and much less nimble.”

“A large part of the momentum around Cloud Computing today is because IT departments now realize they can avoid many of (these) implementation headaches and functionality shortcomings, and instead get the best of both worlds by working with best-of-breed vendors. Cloud Computing provides the opportunity to leverage best-of-breed application offerings, with the standardization and pre-integration of many of the applications and APIs. You can pick the world’s best application for every need, every user, and every business case. You can deploy exactly the number of seats you need, where and when you need them.”

The Senate voted last December to approve a sweeping bipartisan chemical safety bill after years of work and months of tense negotiations.

The primary law overseeing the safety of chemical products—the Toxic Substances Control Act (TSCA)—was passed in 1976 and provides the U.S. Environmental Protection Agency (EPA) authority to review and regulate chemicals in commerce. TSCA was designed to ensure that products are safe for intended use. While the law created a robust system of regulations, over time, confidence in EPA’s regulation of chemicals has eroded.

The Frank R. Lautenberg Chemical Safety for the 21st Century Act, named after the late New Jersey senator, updates the 1976 TSCA to give the Environmental Protection Agency (EPA) broad new powers to study and regulate harmful chemicals like asbestos, while restricting states’ individual abilities to make their own rules. Lautenberg had made chemical reform his top priority before his death in 2013.

The bill would eventually require testing of every chemical currently in commerce, as well as any new chemicals. EPA decisions about chemicals would have to be made solely on the basis of their impact on health and the environment, not on compliance costs.

But the legislation also has significant provisions that the chemical industry asked for, such as restrictions on what states can do on their own, which the industry said is essential for certainty and to avoid a patchwork of rules.

The House passed its own chemical reform bill in June 2015. The Senate sponsors said they plan to work with the House toward a compromise measure.

Key Points of the Bill

Some of the highlights, as outlined in a summary of the Senate bill, are:

Other provisions include minimizing animal testing, the establishment of a federal sustainable chemistry program, interim storage by mercury generators and a prohibition on the export of certain mercury compounds.

New EU rules to improve the monitoring of drinking water across Europe come into force, improving access to wholesome, clean drinking water. As a first step following the European Citizens’ Initiative Right2Water, new rules adopted by the European Commission today give Member States flexibility in how drinking water quality is monitored in around 100,000 water supply zones in Europe. This will allow for more focused, risk-based monitoring while ensuring full protection of public health.

This new monitoring and control system will allow Member States to reduce unnecessary analyses and concentrate on controls that really matter. This amendment of the Drinking Water Directive is a response to calls by citizens and the European Parliament to adopt legislation ensuring a better, fair and comprehensive water supply. It allows for improved implementation of EU rules by Member States because it removes unnecessary burdens. Member States can now decide, on the basis of a risk assessment, which parameters to monitor, given that some drinking water supply zones pose no risk of hazardous substances being found. They can also choose to increase or reduce the frequency of sampling in water supply zones, as well as extend the list of substances to monitor in case of public health concerns. Flexibility in the monitoring of parameters and the frequency of sampling is framed by a number of conditions that must be met to ensure protection of citizens’ health. The new rules follow the principle of ‘hazard analysis and critical control point’ (HACCP), already used in food hygiene legislation, and the water safety plan approach laid down in the World Health Organization’s (WHO) Guidelines for Drinking Water Quality. Member States have two years to apply the provisions of this new legislation.
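As an illustration of how a risk-based rule might adjust monitoring, the Python sketch below sets a yearly sampling frequency for one parameter in one supply zone from its historical results. The thresholds (fractions of the parametric value), the three-year history requirement, and the halving and doubling rules are hypothetical placeholders; the actual conditions are set by the directive and national authorities, and a real risk assessment weighs far more than past results.

```python
def plan_frequency(results, parametric_value, base_per_year, years_of_data,
                   reduce_below=0.6, drop_below=0.3, min_years=3):
    """Return samples per year for one parameter in one supply zone.

    All thresholds are illustrative fractions of the parametric value,
    not values taken from the Drinking Water Directive.
    """
    if not results:
        raise ValueError("at least one historical result is required")
    worst = max(results)
    if worst > parametric_value:
        return base_per_year * 2             # exceedance: monitor more often
    if years_of_data < min_years:
        return base_per_year                 # too little history to relax monitoring
    if worst < drop_below * parametric_value:
        return 0                             # candidate for removal after risk review
    if worst < reduce_below * parametric_value:
        return max(1, base_per_year // 2)    # consistently low: sample less often
    return base_per_year
```

The exceedance check runs first so that a single high result always outweighs a long clean history, in keeping with the text's emphasis on protecting public health.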

To effectively manage sampling and monitoring data at over 100,000 water supply zones, water utilities and other stakeholders will need access to software like Locus EIM Water to organize complex water quality management information in real time and automate laboratory management programs and reporting. Locus EIM is used by numerous water utilities in the United States.

A recent article in the Los Angeles Times discussed advances in environmental monitoring technologies. Rising calls for cleaner air and limits on climate change are driving a surge in new technology for measuring air emissions and other pollutants, a data revolution that is opening new windows into the micro-mechanics of environmental damage. Data from these new monitoring technologies, coupled with advances in data management (Big Data) and the Internet of Things (IoT), as discussed in my article “Keeping the Pulse of the Planet: Using Big Data to Monitor Our Environment” published last year, is creating an entirely new industry and bringing much-needed transparency to environmental degradation. Real-time monitoring of radioactive emissions or water quality at any point around the globe is slowly becoming a reality.

According to the article author William Yardley, “the momentum for new monitoring tools is rooted in increasingly stringent regulations, including California’s cap-and-trade program for greenhouse gas emissions, and newly tightened federal standards and programs to monitor drought and soil contamination. A variety of clean-tech companies have arisen to help industries meet the new requirements, but the new tools and data are also being created by academics, tinkerers and concerned citizens — just ask Volkswagen, whose deceptive efforts to skirt emissions-testing standards were discovered with the help of a small university lab in West Virginia.”

“Taking it all into account, the Earth is coming under an unprecedented new level of scrutiny.”

“There are a lot of companies picking up on this, but who is interested in the data — to me, that’s also fascinating,” said Colette Heald, an atmospheric chemist at the Massachusetts Institute of Technology. “We’re in this moment of a huge growth in curiosity — of people trying to understand their environment. That coincides with the technology to do something more.”

The push is not limited to measuring air and emissions. Tools to sample soil, air emissions, produced water, waste management, monitor water quality, test ocean acidity and improve weather forecasting are all on the rise. Drought has prompted new efforts to map groundwater and stream flows and their water quality across the West.

Two key issues need to be addressed: the validity of data from new instruments and sensors for enforcement purposes, and where all this (big) data will be stored and how accessible it will be. The first question will be answered as new hand-held data collection instruments, sensors, and devices undergo testing and accreditation by government agencies. The second issue, big data storage, has already been addressed by companies like Locus Technologies, which has been aggregating massive amounts of environmental monitoring data in its cloud-based EIM (Environmental Information Management) software.

As the article put it: “When the technology is out there and everyone starts using it, the question is, how good is the data? If the data’s not high enough quality, then we’re not going to make regulatory decisions based on that. Where is this data going to reside in 10 years, when all these sensors are out there, and who’s going to [manage] that information? Right now it’s kind of organic so there’s no centralized place where all of this information is going.”

However, private industry and some government organizations, like the Department of Energy (DOE), are already preparing for the avalanches of data hitting their corporate networks and are using the Locus cloud to organize and report the increased volume of monitoring information from their facilities and other monitoring networks.

SAN FRANCISCO, Calif., Dec. 20, 2023 – Locus Technologies (Locus), a trailblazer in cloud computing enterprise software for environmental, energy, air, ESG (Environmental, Social, and Governance), sustainability, water, and compliance management, proudly announces the successful completion of the System and Organization Controls (SOC) 1℠ and SOC 2℠ examinations. These examinations reaffirm Locus’s unwavering dedication to maintaining exceptional standards in financial reporting management and overall system integrity, setting it apart from its competitors.

Conducted by A-Lign, a respected CPA firm, the comprehensive examination has once again validated Locus Technologies’ unparalleled commitment to stringent industry standards. The resulting CPA report attests to the company’s relentless focus on robust controls and procedures, ensuring the security, availability, processing integrity, confidentiality, and privacy of its Software as a Service (SaaS) system, placing it at the forefront of data protection in the industry.

Neno Duplan, Founder and CEO at Locus Technologies, expressed pride in the company’s continued strides in data security, stating, “Our successful compliance renewal further solidifies our position as an industry leader, showcasing our commitment to safeguarding our clients’ invaluable data. In our 27-year history as a SaaS company, we have had no breaches of customer data.”

Locus’ consistent possession of these certifications since 2012 signifies the company’s steadfast dedication to excellence and its role as a standard-bearer for EHS Compliance and ESG software in providing a secure, dependable, and market-tested SaaS platform.

The recent examination by A-Lign not only reaffirms Locus Technologies’ position along with its Amazon Web Services (AWS) partner as an industry leader in cloud-based enterprise software but also underscores the company’s proactive approach to ensuring the highest levels of service quality and data protection for its global clientele, positioning it far ahead of its competitors.

Customers can remain assured of Locus’ unwavering commitment to delivering superior SaaS solutions and maintaining the security and reliability of customer data. For a company to receive SOC certification, it must have sufficient policies and strategies that satisfactorily protect customers’ data. SOC 1 and SOC 2 certifications both require a service organization to demonstrate controls governing its interactions with customers and customer data.

Locus leads the industry in providing cloud computing enterprise software for environmental, energy, air, ESG, sustainability, water, and compliance management. The company’s dedication to technological advancement and unparalleled data security ensures it delivers cutting-edge solutions that surpass industry standards and positions it as an industry leader in setting new benchmarks for safeguarding customer data.

About Locus Technologies

Locus gives businesses the power to be green on demand and has pioneered web-based environmental software suites. Locus software enables companies to organize and validate all critical environmental information in a single system, which includes analytical data for water, air, soil, greenhouse gases, sustainability, compliance, and environmental content. Locus software is delivered through Cloud computing (SaaS), so there is no hardware to procure, no hefty up-front license fee, and no complex set-ups. Locus also offers services to help implement and maintain environmental programs using our unique technologies.

For further information regarding Locus Technologies and its commitment to excellence in SaaS solutions, please visit www.locustec.com or email info@locustec.com.

Neno Duplan is founder and CEO of Locus Technologies, a Silicon Valley-based environmental software company founded in 1997. Locus evolved from his work as a research associate at Carnegie Mellon in the 1980s, where he developed the first prototype system for environmental information management. This early work led to the development of numerous databases at some of the nation’s largest environmental sites, and ultimately, to the formation of Locus in 1997.

Mr. Duplan recently sat down with Environmental Business Journal to discuss a myriad of topics relating to technology in the environmental industry such as Artificial Intelligence, Blockchain, Multi-tenancy, IoT, and much more.

Click here to learn more and purchase the full EBJ Vol XXXIII No 5&6: Environmental Industry Outlook 2020-2021

Locus is mentioned in ENR’s article about data mining, discussing how Locus software helps our long-time customer, Los Alamos National Laboratory, manage their environmental compliance and monitoring.

Big data has become a major buzzword in tech these days; the ability to gather, store and aggregate information about individuals has exploded in the last few years.

299 Fairchild Drive

Mountain View, CA 94043

P: +1 (650) 960-1640

F: +1 (415) 360-5889

Locus Technologies provides cloud-based environmental software and mobile solutions for EHS, sustainability management, GHG reporting, water quality management, risk management, and analytical, geologic, and ecologic environmental data management.