Centralized Environmental Data: Lessons Learned from Electronic Health Record Software

Consider the potential for AI to predict trends pertaining to personal health and planet health and to identify life-saving correlations.

Over the longer term, we envision a world where we can use shared environmental data to take a more concerted approach to our collective environmental stewardship. We consider the work at Locus an essential step in addressing a monumental global problem.

The conversation about the environmental landscape has evolved drastically over the last 50 years as we continue to learn how human activity has affected the planet. The 21st century’s environmental challenges are manifold: shortages of drinkable water; the impact of the many pollutants released into our air, water, and soil, including greenhouse gas emissions, wastewater, radioactive materials, and other hazardous materials; the strain of an ever-increasing population on limited resources and threatened ecosystems; and climate change, whose extreme weather pushes more and more of the population into precarious situations.

Companies and society need a collective and holistic understanding of the problems we face. The only way to understand the whole picture and act meaningfully on a global level is for all companies to understand the impact of their activities. It’s impossible to mitigate the risks and effects of those activities on the planet when we do not have the data to characterize the problem and see a complete picture of what we face.

This whole picture will require us to monitor an unprecedented quantity of data, and it is in handling this massive data explosion that Locus’s current efforts come into play. We are preparing for this ever-increasing data tsunami by laying the groundwork with the companies and government agencies Locus works with daily. They are already tracking more data, analyzing their activities, finding ways to operate more efficiently, producing fewer emissions and less wastewater, and improving their environmental footprints. Locus would like to see the scale of those mitigation efforts increase a thousand-fold over the next ten years and to see our efforts yield clear improvements in our collective environmental impacts.

Someday we may have environmental data sharing among all public and private organizations, the regulatory bodies that govern them, and the scientific community, which would give us an even more complete picture of our environmental activities. However, any such coordinated effort is years in the making. In the meantime, Locus is making sure we are ready to help tackle the problem.

This is the final post highlighting the evolution of Locus Technologies over the past 25 years. The previous post can be found here.

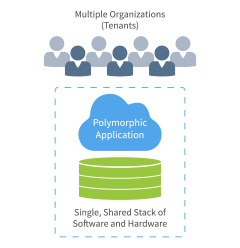

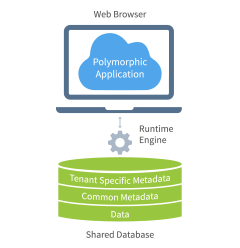

Locus Platform is the preeminent on-demand application development platform for EHS, ESG, and beyond, supporting many organizations and government institutions. Individual enterprises and governmental organizations trust Locus’s SaaS Platform to deliver robust, reliable, Internet-scale applications. The foundation of Locus Platform (LP) is a metadata-driven software architecture that enables multitenant applications. This unique technology, a significant differentiator between Locus and its competitors, makes the Locus Platform fast, scalable, and secure for any application. What do we mean by metadata-driven? If you look up metadata-driven development on the web, you find the following:

“The metadata-driven model for building applications allows an Enterprise to deploy multiple applications on the same hosting infrastructure easily. Since multiple applications share the same Designer and Rendering Engine, the only difference is the metadata created uniquely for each application.”

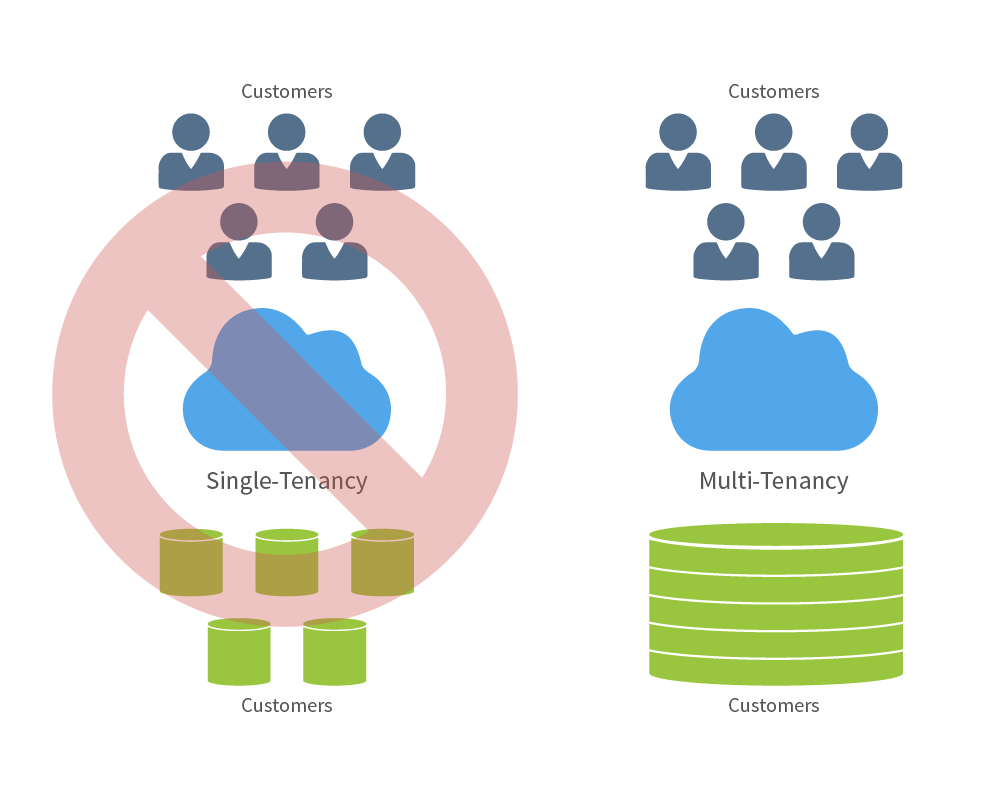

The Triumph of the Multitenant SaaS model, which Locus brings to the EHS/ESG industry.

In the case of LP, the shared engine is the Designer and Rendering Engine cited in this definition. All LP customers share this engine and use it to create their custom applications. These applications may consist of dashboards, data entry forms, plots, reports, and so forth, all designed to meet a set of requirements. Instructions (metadata) stored in a database tell the engine how to build these entities, which together form a client-designed application.
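To make the idea concrete, here is a minimal Python sketch of a metadata-driven engine. It assumes a simplified setup in which each tenant’s form definition is a plain dictionary standing in for metadata rows in the database, and one shared function turns any definition into HTML. The names `TENANT_METADATA`, `WIDGETS`, and `render_form` are hypothetical and are not Locus Platform APIs.

```python
from typing import Any

# Hypothetical metadata, standing in for what each tenant's designer would
# store in the database. Two tenants, one shared engine.
TENANT_METADATA: dict[str, dict[str, Any]] = {
    "tenant_a": {
        "form": "Water Sample Entry",
        "fields": [
            {"name": "well_id", "label": "Well ID", "type": "text"},
            {"name": "benzene_ugl", "label": "Benzene (ug/L)", "type": "number"},
        ],
    },
    "tenant_b": {
        "form": "Air Permit Log",
        "fields": [
            {"name": "stack_id", "label": "Stack ID", "type": "text"},
        ],
    },
}

# Widget templates known to the shared rendering engine.
WIDGETS = {
    "text": '<input type="text" name="{name}">',
    "number": '<input type="number" name="{name}">',
}


def render_form(tenant: str) -> str:
    """Build a tenant's form entirely from its metadata; no tenant-specific code."""
    meta = TENANT_METADATA[tenant]
    parts = [f"<h2>{meta['form']}</h2>"]
    for field in meta["fields"]:
        widget = WIDGETS[field["type"]].format(name=field["name"])
        parts.append(f"<label>{field['label']} {widget}</label>")
    return "\n".join(parts)


print(render_form("tenant_a"))
```

In this sketch, adding a new form for a tenant means adding metadata rather than writing or deploying new engine code.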

Locus Platform’s evolution to the leading EHS and ESG Platform.

History has shown that every so often, incremental advances in technology and changes in business models create significant paradigm shifts in the way software applications are designed, built, and delivered to end-users. The invention of personal computers (PCs), computer networking, and graphical user interfaces (GUIs) gave rise to the adoption of client/server applications over expensive, inflexible, character-mode mainframe applications. And today, reliable broadband Internet access, service-oriented architectures (SOAs), and the cost inefficiencies of managing dedicated on-premises applications are driving a transition toward the delivery of decomposable, collected, shared, Web-based services called software as a service (SaaS).

With every paradigm shift comes a new set of technical challenges, and SaaS is no different. Existing application frameworks are not designed to address the unique needs of SaaS. This void has given rise to another new paradigm shift, namely platform as a service (PaaS). Hosted application platforms are managed environments specifically designed to meet the unique challenges of building SaaS applications and to deliver them more cost-efficiently.

The focus of Locus Platform is multitenancy, a fundamental design approach that dramatically improves the manageability of EHS and ESG SaaS applications. Locus Platform is the world’s first PaaS built from scratch to take advantage of the latest software developments for building EHS, ESG, sustainability, and other applications. Locus Platform delivers turnkey multitenancy for Internet-scale applications.

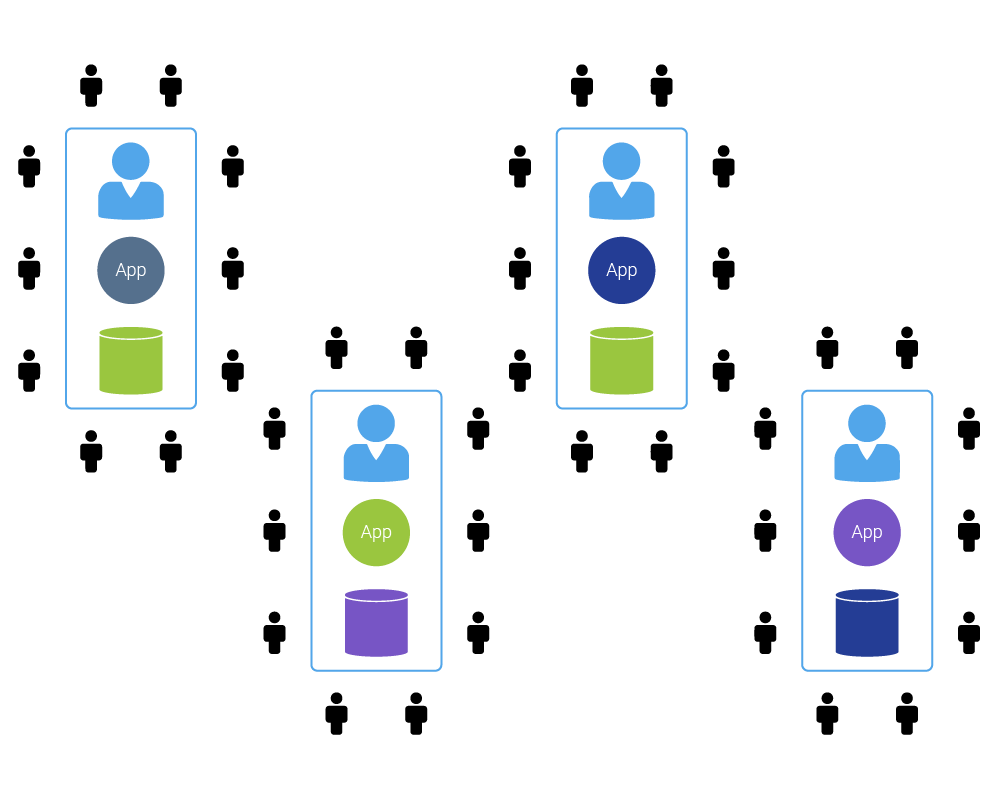

A single shared software and hardware stack across all customers.

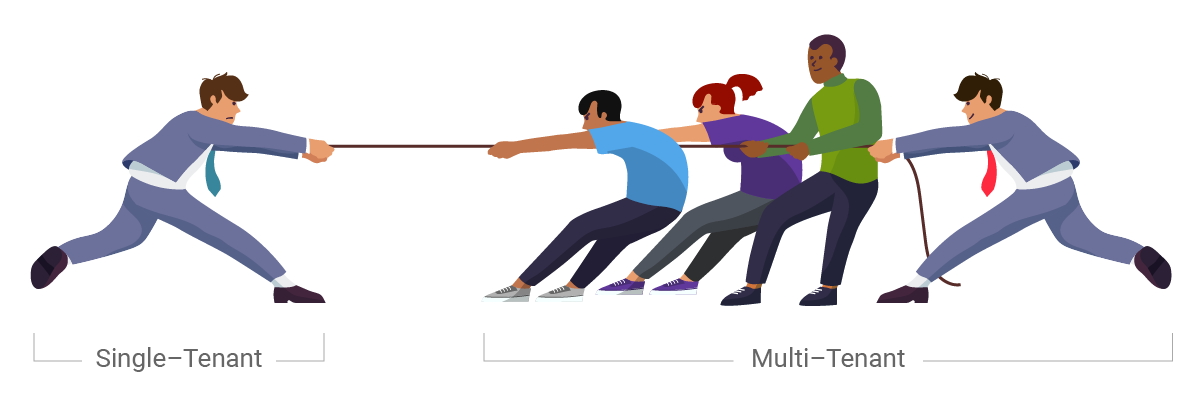

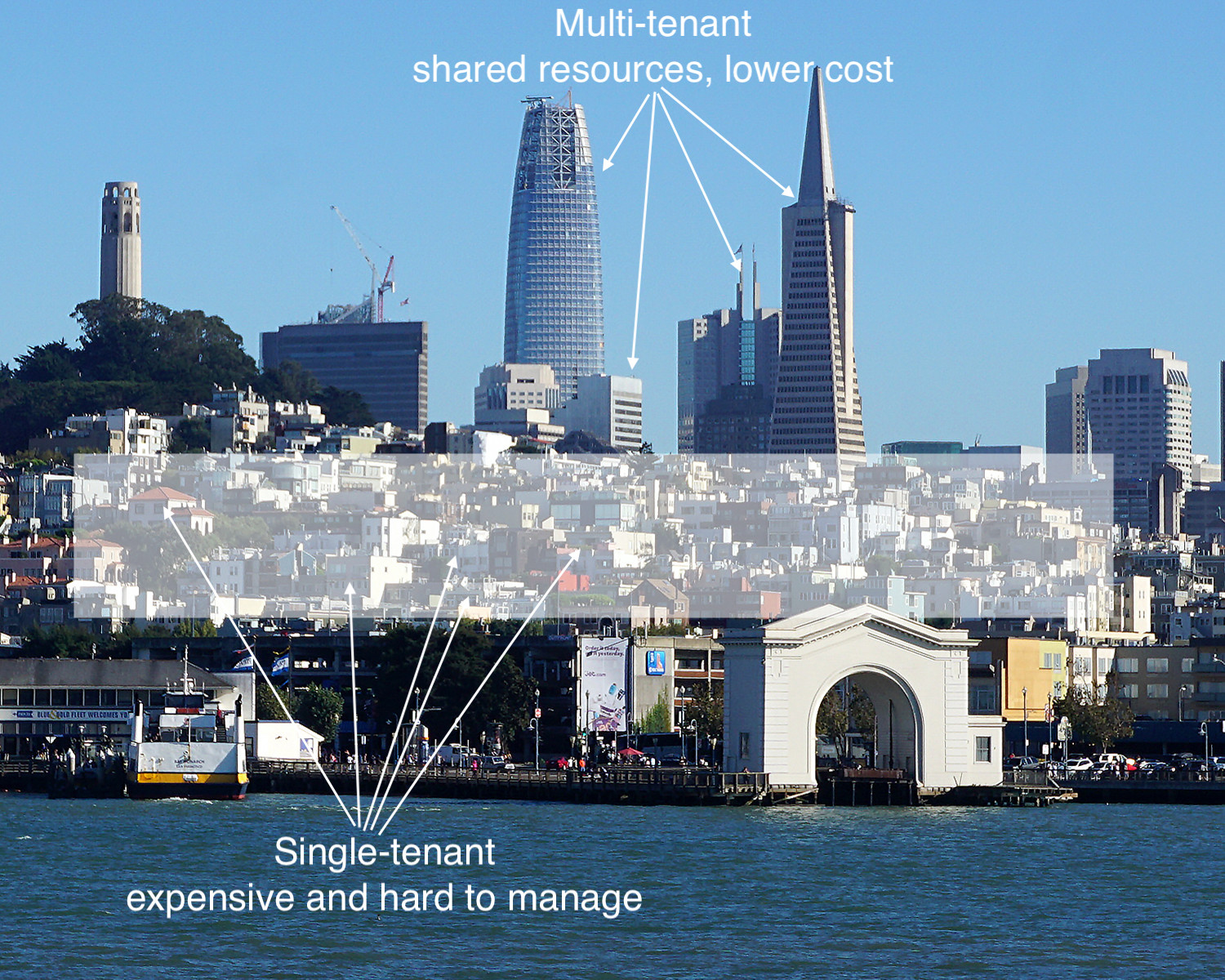

The same stack serves many different sets of users: all Locus LP applications are multitenant rather than single-tenant. Whereas a traditional single-tenant application requires a dedicated group of resources to fulfill the needs of just one organization, a multitenant application can satisfy the needs of multiple tenants (companies, departments within a company, etc.) using the hardware resources and staff needed to manage just a single software instance. A multitenant application cost-efficiently shares a single stack of resources to satisfy the needs of multiple organizations.

Single-tenant apps are expensive for the vendor and the customer.

Tenants using a multitenant service operate in virtual isolation: Organizations can use and customize an application as though they each have a separate instance. Yet, their data and customizations remain secure and insulated from the activity of all other tenants. The single application instance effectively morphs at runtime for any particular tenant at any given time.
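One common way to achieve this kind of virtual isolation is a shared schema in which every row carries a tenant identifier and every query is filtered by it, while per-tenant settings are stored as data and applied at request time. The Python sketch below illustrates that pattern under simplified assumptions. The table, the tenant names, and the `TenantSession` class are hypothetical illustrations, not a description of Locus’s internal design.

```python
import sqlite3

# One shared database and schema for all tenants (hypothetical table).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE results (tenant_id TEXT, well_id TEXT, benzene REAL)")
conn.executemany(
    "INSERT INTO results VALUES (?, ?, ?)",
    [("acme", "MW-1", 4.2), ("acme", "MW-2", 1.1), ("globex", "MW-1", 9.9)],
)

# Per-tenant customizations kept as data and applied when a request runs.
TENANT_SETTINGS = {
    "acme": {"benzene_label": "Benzene (ug/L)"},
    "globex": {"benzene_label": "Benzene, total (ug/L)"},
}


class TenantSession:
    """Every query issued through a session is filtered by its tenant_id."""

    def __init__(self, tenant_id: str):
        self.tenant_id = tenant_id
        self.settings = TENANT_SETTINGS[tenant_id]

    def results(self) -> list[tuple[str, float]]:
        rows = conn.execute(
            "SELECT well_id, benzene FROM results WHERE tenant_id = ? ORDER BY well_id",
            (self.tenant_id,),
        )
        return rows.fetchall()


acme = TenantSession("acme")
print(acme.settings["benzene_label"], acme.results())
```

Both tenants run the same code against the same table, yet each one sees only its own rows and its own labels.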

Single-tenant apps create waste

Multitenancy is an architectural approach that pays dividends to both application providers (Locus) and users (Locus customers). Operating just one application instance for multiple organizations yields tremendous economies of scale for the provider. Only one set of hardware resources is necessary to meet the needs of all users, a relatively small, experienced administrative staff can efficiently manage a single stack of software and hardware, and developers can build and support a single code base on just one platform (operating system, database, etc.) rather than many. The economics afforded by multitenancy allow the application provider, in turn, to offer the service to customers at a lower cost; everyone involved wins.

Some attractive side benefits of multitenancy are improved quality, user satisfaction, and customer retention. Unlike single-tenant applications, which are isolated silos deployed outside the reach of the application provider, a multitenant application is one large community that the provider itself hosts. This design shift lets the provider gather operational information from the collective user population (which queries respond slowly, what errors happen, etc.) and make frequent, incremental improvements to the service that benefit the entire user community at once.
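The following sketch illustrates that feedback loop. Every tenant’s requests pass through the same instance, so a provider can pool response times across tenants and spot slow operations. The log format, query names, and one-second threshold below are hypothetical.

```python
from collections import defaultdict
from statistics import median

# Hypothetical request log from the single shared instance:
# (tenant, query name, response time in milliseconds).
REQUEST_LOG = [
    ("acme", "load_dashboard", 120), ("globex", "load_dashboard", 140),
    ("acme", "export_edd", 2300), ("initech", "export_edd", 2100),
    ("globex", "export_edd", 2500), ("initech", "load_dashboard", 110),
]


def slowest_queries(log, threshold_ms=1000):
    """Pool response times by query across all tenants and flag slow ones."""
    by_query = defaultdict(list)
    for _tenant, query, ms in log:
        by_query[query].append(ms)
    medians = {query: median(times) for query, times in by_query.items()}
    return sorted(
        ((query, m) for query, m in medians.items() if m > threshold_ms),
        key=lambda item: item[1],
        reverse=True,
    )


print(slowest_queries(REQUEST_LOG))  # [('export_edd', 2300)]
```

A single fix to the flagged operation then reaches every tenant at once.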

Two additional benefits of a multitenant platform-based approach are collaboration and integration. Because all users run all applications in one space, it is easy to allow any user of any application varied access to specific data sets. This capability simplifies the effort necessary to integrate related applications and the data they manage.

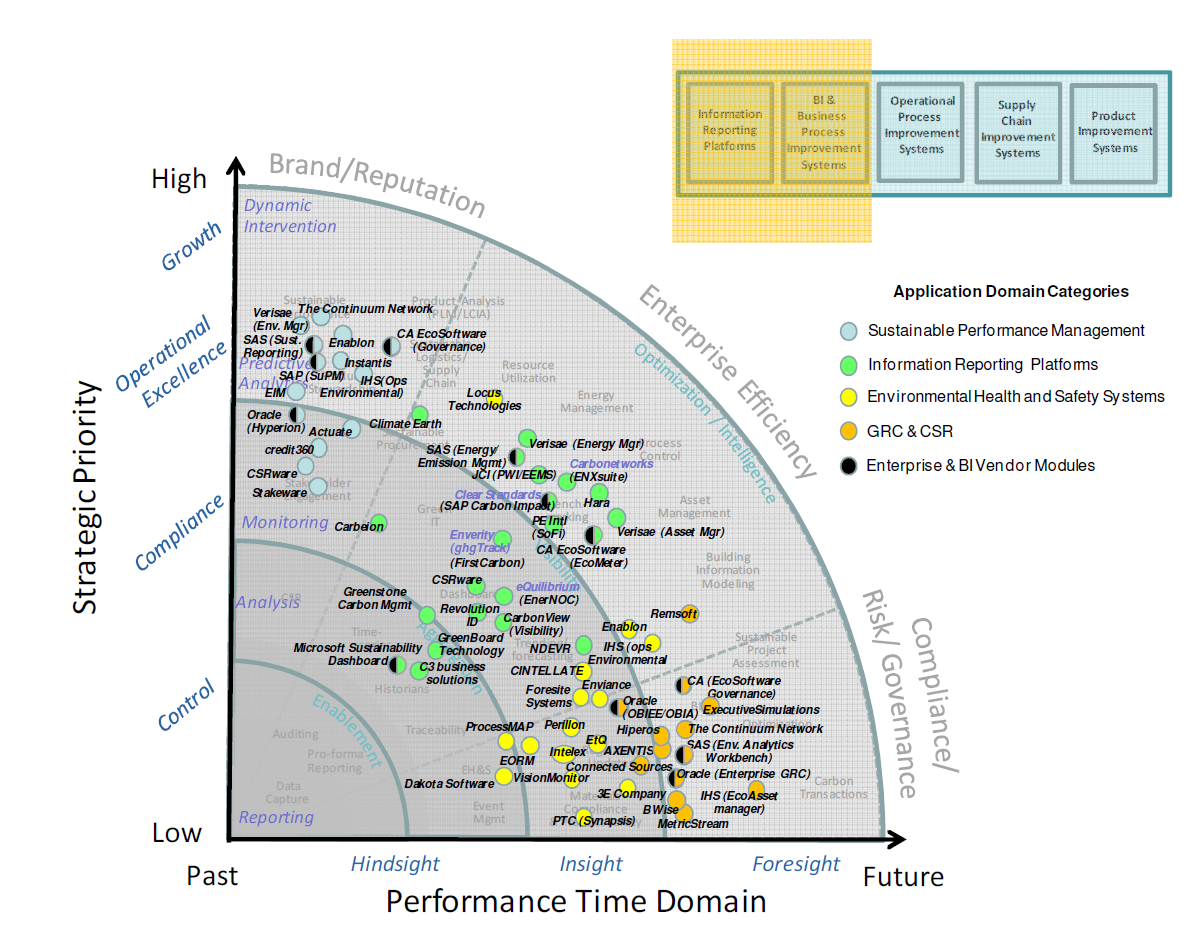

Gartner recognized the power of the Locus Platform in their early research.

This is the third post highlighting the evolution of Locus Technologies over the past 25 years. The first two can be found here and here. This series continues with Locus at 25 Years: How did we fund Locus?

How did Locus succeed in deploying Internet-based products and services in the environmental data sector? After several years of building and testing its first web-based systems (EIM) in the late 1990s, Locus began to market its product to organizations seeking to replace their home-grown and silo systems with a more centralized, user-friendly approach. Such companies were typically looking for strategies that eliminated their need to deploy hated and costly version updates while at the same time improving data access and delivering significant savings.

Several companies immediately saw the benefit of EIM and became early adopters of Locus’s innovative technology. Most of these companies still use EIM and are approaching their 20th anniversary as Locus clients. For many years after these early adoptions, Locus enjoyed steady but not explosive growth in EIM usage.

E. M. Rogers’s Diffusion of Innovations (DOI) Theory has much to offer in explaining the pattern of growth in EIM’s adoption. In the early years of an innovative and disruptive technology, a few companies are what he labels innovators and early adopters. These companies, small in number, are willing to take a risk, are aware of the need to make a change, and are comfortable adopting innovative ideas. The vast majority, according to Rogers, do not fall into either of these categories. Instead, they fall into one of the following groups: early majority, late majority, and laggards. As the adoption rate grows, there is a point at which an innovation reaches critical mass. In his 1991 book “Crossing the Chasm,” Geoffrey Moore theorizes that this point lies at the boundary between the early adopters and the early majority. This tipping point between niche appeal and mass (self-sustained) adoption is known simply as “the chasm.”

Rogers identifies five attributes of an innovation that influence its adoption: relative advantage, compatibility, complexity, trialability, and observability.

In Locus’s early years of marketing EIM, some of these factors probably determined whether potential clients accepted EIM. Our early adopters were fed up with having their data stored in various incompatible silo systems to which only a few people had access. They appreciated EIM’s organization, the freedom from managing updates, and the ability to test the design on the web using a demonstration database that Locus had set up. When no sale could be made, other factors not listed by Rogers or Moore were often involved. In several cases, organizations looking to replace their environmental software had budgets for the initial purchase or licensing of a system but insufficient funds allocated for recurring costs, such as those of Locus’s subscription model. One such client was so enamored with EIM that it asked if it could have the system for free after the first year. Another hurdle Locus came up against was the unwillingness of clients at the user level to adopt an approach that could eliminate their co-workers’ jobs in their IT departments. But the most significant barriers Locus faced revolved around organizations’ security concerns about placing their data in the cloud.

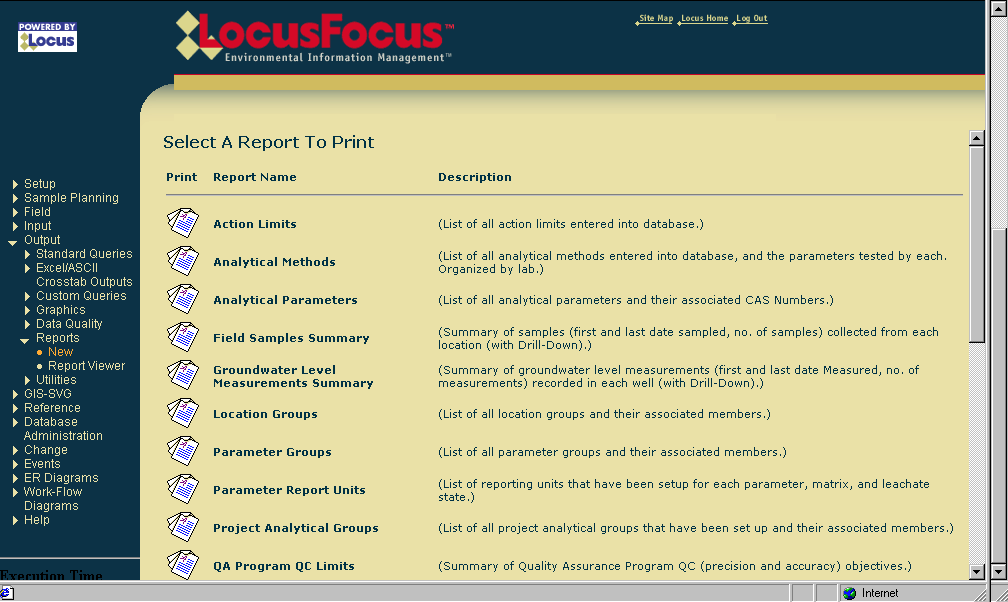

One of the earliest versions of EIM

Oh, how much has changed in the intervening years! The RFPs that Locus receives these days explicitly call for a web-based system or, much less often, express no preference between a web-based and a client-server system. We believe this change in attitudes toward SaaS applications has many root causes. Individuals now routinely do their banking over the web. They store their files in Dropbox and their photos on sites like Google Photos or in Apple’s and Amazon’s clouds. They freely allow vendors to store their credit card information in the cloud to avoid entering this information anew every time they visit a site. No one who keeps track of developments in the IT world can be oblivious to the explosive growth of Amazon Web Services (AWS), Salesforce, and Microsoft’s Azure. We believe most people now have more faith in storing and backing up their files on the web than in handling these tasks themselves.

An early update to EIM software

Changes have also occurred in the attitudes of IT departments. Adopting SaaS applications removes the need to perform system updates or install new versions on local computers. Instead, for systems like EIM, only the vendor needs to complete updates, and these take place during off-hours or at announced times. This saves money and eliminates headaches. A particularly nasty aspect of local, client-server systems is the all-too-common nightmare in which installing an updated version of one application causes failures in other applications that it calls. None of these problems typically occur with SaaS applications. In the case of EIM, all third-party applications it uses run in the cloud, and Locus tests their updates thoroughly before they go live.

Locus EIM has become more streamlined and user-friendly over the years.

Yet another factor has driven potential clients in the direction of SaaS applications, namely, search. Initially, Locus was primarily focused on developing software tools for environmental cleanups, monitoring, and mitigation efforts. Such efforts typically involved (1) tracking vast amounts of data to demonstrate progress in the cleanup of dangerous substances at a site and (2) the increased automation of data checking and reporting to regulatory agencies.

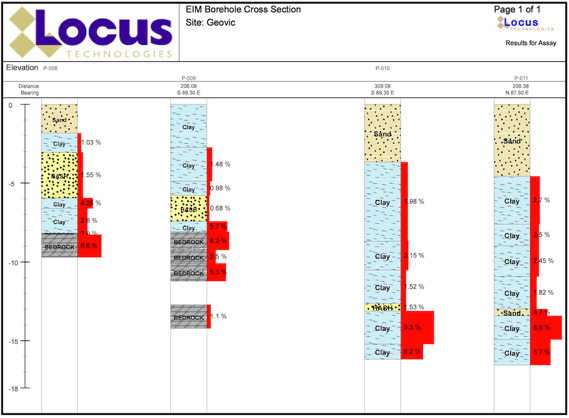

Locus EIM handles all types of environmental data.

Before systems like EIM were introduced, most data tracking relied on inefficient spreadsheets and other manual processes. Once a mitigation project was completed, the data collected by the investigative and remediation firms remained scattered and stored in their files, spreadsheets, or local databases. In essence, the data was buried away and was not used or available to assess the impacts of future mitigation efforts and activities or to reduce ongoing operational costs. Potential opportunities to avoid additional sampling and collection of similar data were likely hidden amongst these early data “storehouses,” yet few were aware of this. The result was that no data mining was taking place or possible.

Locus EIM in 2022

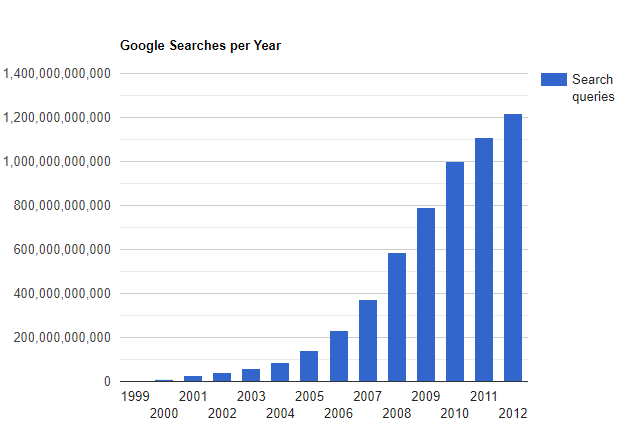

The early development of EIM took place when searches on Google were relatively infrequent (see years 1999-2003 below). Currently, Google processes 3.5 billion searches a day and 1.2 trillion searches per year. Before web-based searches became possible, companies that hired consulting firms to manage their environmental data had to submit a request such as “Tell me the historical concentrations of Benzene from 1990 to the most recent sampling date in Wells MW-1 through MW-10.” An employee at the firm would then have to locate and review a report or spreadsheet or, if the firm had its own database, search it for the requested data. The results would then be transmitted to the company in some manner. Such a request did not necessarily come from the company; it might come from another consulting firm with unique expertise. These search and retrieval activities translated into prohibitive costs and delays for the company that owned the site.

Google Searches by Year

Over the last few decades, everyone has become dependent on and addicted to web searching. Site managers expect to be able to perform their own searches, but honestly, they do so less often than we would have expected. What has changed are managers’ expectations. They hope to get responses to requests like the one we imagined above in a matter of minutes or hours, not days. They may not even expect a bill for such work. The bottom line is that the power of search on the web predisposes many companies to store their data in the cloud rather than in a spreadsheet or in their consultant’s local, inaccessible system.
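As a rough illustration of this kind of self-service search, the Benzene request from the earlier example (wells MW-1 through MW-10 since 1990) becomes a simple filter once the data lives in one centralized store. The record layout and sample values below are hypothetical, and a production system would run this as a database query rather than over a Python list.

```python
from datetime import date

# Hypothetical centralized result records: (well, analyte, sample date, ug/L).
RESULTS = [
    ("MW-1", "Benzene", date(1989, 6, 1), 12.0),
    ("MW-1", "Benzene", date(1995, 3, 14), 8.5),
    ("MW-4", "Toluene", date(2001, 9, 2), 3.1),
    ("MW-7", "Benzene", date(2020, 11, 30), 0.9),
    ("MW-12", "Benzene", date(2018, 5, 5), 2.2),
]


def well_number(well_id: str) -> int:
    """Extract the numeric part of a well ID such as 'MW-7'."""
    return int(well_id.split("-")[1])


def benzene_history(records, first_well=1, last_well=10, start=date(1990, 1, 1)):
    """Benzene results in wells MW-first..MW-last sampled on or after `start`."""
    return [
        (well, sampled, value)
        for well, analyte, sampled, value in records
        if analyte == "Benzene"
        and first_well <= well_number(well) <= last_well
        and sampled >= start
    ]


for row in benzene_history(RESULTS):
    print(row)
```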

The world has changed since EIM was first deployed, and many more applications are now on the path that Locus embarked on some 20 years ago. Today, Locus is the world leader in managing on-demand environmental information. Few potential customers question the merits of Locus’s approach and the systems it has built. In short, the software world has caught up with Locus. EIM and LP have revolutionized how environmental data is stored, accessed, managed, and reported. Locus’s SaaS applications have long been ahead of the curve in helping private and public organizations manage their environmental data and turn their environmental data management into a competitive advantage in their operating models.

By competitive advantages, we mean improved data quality and flow and lower operating costs. EIM’s Electronic Data Deliverable (EDD) module allows for the upload of thousands of laboratory results in a few minutes. Over 60 automated checks are performed on each reported result. Comprehensive studies conducted by two of our larger clients show savings in the millions gained from the adoption of EIM’s electronic data verification and validation modules and the ability of labs to load their EDDs directly into a staging area in the system. The use of such tools removes much of the tedium of manual data checking and, at the same time, results in both the elimination of manually introduced errors and the reduction of throughput times (from sampling to data reporting and analysis). In short, the adoption of our systems has become a win-win for companies and their data managers alike.
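Automated checks of this kind can be pictured with a small sketch. The three rules below are hypothetical examples chosen for illustration: allowed units, an analysis date no earlier than the sample date, and no negative concentrations. They are not EIM’s actual list of more than 60 checks.

```python
from datetime import date

VALID_UNITS = {"ug/L", "mg/L"}  # hypothetical list of accepted units


def check_result(row: dict) -> list[str]:
    """Return the problems found in one reported lab result (empty if none)."""
    problems = []
    if row.get("units") not in VALID_UNITS:
        problems.append("unrecognized units")
    if row["analyzed"] < row["sampled"]:
        problems.append("analysis date precedes sample date")
    if row["result"] is not None and row["result"] < 0:
        problems.append("negative concentration")
    return problems


def screen_edd(rows: list[dict]) -> tuple[list[str], list[tuple[str, list[str]]]]:
    """Split an EDD into sample IDs that pass every check and IDs flagged for review."""
    accepted, flagged = [], []
    for row in rows:
        issues = check_result(row)
        if issues:
            flagged.append((row["sample_id"], issues))
        else:
            accepted.append(row["sample_id"])
    return accepted, flagged


edd = [
    {"sample_id": "S-001", "units": "ug/L", "result": 4.2,
     "sampled": date(2022, 3, 1), "analyzed": date(2022, 3, 3)},
    {"sample_id": "S-002", "units": "ppm", "result": -1.0,
     "sampled": date(2022, 3, 1), "analyzed": date(2022, 2, 28)},
]
accepted, flagged = screen_edd(edd)
print(accepted)  # ['S-001']
print(flagged)
```

Results that pass every check could move on from the staging area automatically, and only the flagged rows would need a person’s attention.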

This is the second post highlighting the evolution of Locus Technologies over the past 25 years. The first can be found here. This series continues with Locus at 25 Years: Locus Platform, Multitenant Architecture, the Secret of our Success.

To celebrate a milestone 25 years of success in EHS and ESG software development, we sat down with Locus President Wes Hawthorne for a brief discussion. In this post, we ask him a series of questions highlighting the past, present, and future of EHS and ESG.

One of the persistent challenges we’ve seen for the past 25 years is that the responsibilities of environmental professionals are continually expanding. Previously, almost all environmental work was localized, with facility-level permits for air, water, waste, etc. That has expanded over the years to include new regulations and reporting requirements for sustainability, social metrics, and other new compliance areas, while the old facility-level programs still continue. This has put more pressure on environmental managers to keep up with these programs and increased their reliance on tools to manage that information. That’s where we at Locus have always focused our effort: making that ever-expanding workload more manageable with modern solutions.

The current flood of interest in ESG is certainly notable for bringing corporate attention to the environmental field, as well as for bringing requirements from the SEC here in the US. We have become accustomed to oversight from multiple regulatory bodies at the local, state, and federal levels, but the SEC would be a newcomer in our line of work. Its involvement will be accompanied by a range of new requirements that are common in the financial world but would be unfamiliar to environmental staff.

Across other EHS fields, we are seeing increased demand for transparency in EHS functions. Overall, this is a positive move, as it brings more attention to EHS issues and develops a better EHS culture within organizations. But this also drives the need for better tools to make EHS information readily available across all levels of the organization.

As far as technologies, the ones most likely to have significant impact in the environmental field are ones that don’t require a significant capital investment. Although there are definitely some practical advantages to installing smart monitoring devices and other new technologies, procuring the funding for those purchases is often difficult for environmental professionals. Fortunately, there are still many technologies that have already been implemented successfully in other fields, but only need to be adapted for environmental purposes. Even simple changes like using web-based software in place of spreadsheets can have a huge impact on efficiency. And we haven’t yet seen the full impact of the proliferation of mobile devices on EHS functions. We are still working on new ways to take advantage of mobile devices for data collection, analysis, and communication purposes.

We’ve seen a number of innovation milestones in the past 25 years, and while we didn’t invent SaaS, we’ve been largely responsible for adapting and perfecting it for environmental purposes. One of the major innovations we’ve integrated into our products is online GIS tools, which let users easily visualize their environmental data on maps without expensive desktop software. Another was our fully configurable software platform with built-in form, workflow, and report builders tailored for environmental purposes, which allows anyone to build and deploy environmental software applications that exactly match their needs. We’ve incorporated many other innovations into our software, but these two stand out as the most impactful.

More and more, we are seeing all types of reporting being converted into pure data exchanges. Reports that used to include regulatory forms and text interpretations are being replaced with text or XML file submittals. This transition is being driven largely by the availability of technology that lets EHS professionals generate and read these files, but it is also promoted by the regulatory agencies and other stakeholders who receive these reports. Stakeholders have less time to read volumes of interpretive text and are becoming more skeptical of potential bias in how facts are presented in text. These trends are driving the need for more pure data exchanges, with increasing emphasis on quantifiable metrics. These types of reports are also more readily compared against regulatory or industry standards. For reporters, lengthy corporate reports with volumes of text and graphics are becoming less common, and the success of an organization’s programs will rely increasingly on robust data sets, since ultimately only the data will be reported.
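For a sense of what a pure data submittal looks like, the sketch below serializes a few quantified metrics with Python’s standard `xml.etree.ElementTree` module. The element and attribute names (`Submittal`, `Metric`, `facility`, `period`) and the values are hypothetical. Real agency schemas define their own structure.

```python
import xml.etree.ElementTree as ET


def build_submittal(facility_id: str, period: str, metrics: dict[str, float]) -> str:
    """Serialize reported metrics as a small XML document (hypothetical schema)."""
    root = ET.Element("Submittal", facility=facility_id, period=period)
    for name, value in metrics.items():
        metric = ET.SubElement(root, "Metric", name=name)
        metric.text = str(value)
    return ET.tostring(root, encoding="unicode")


xml_text = build_submittal("FAC-001", "2022-Q1", {"CO2e_tonnes": 1520.5, "water_m3": 88000})
print(xml_text)
```

Because every value sits in a named element, a recipient can compare it directly against a standard or threshold without parsing interpretive text.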

There are actually a few that immediately come to mind. One reason is the nature of our continually evolving products. Because we provide our solutions as SaaS, our software adapts to new environmental requirements and new technologies. If our software were still the same as it was 25 years ago, it simply wouldn’t be sufficient for today’s requirements. Since our software is updated multiple times each year, the incremental changes are difficult to notice, but they can be readily seen if you compare today’s software with the original from 1997. And we’re committed to continuing the development of our products as environmental needs change.

The other primary reason for our success is our excellent staff and the environmental expertise we bring to our customers. We simply could not provide the same level of support without our team of environmental engineers, scientists, geologists, chemists, and an array of others. Having that real-world understanding of environmental topics is how we’ve maintained customer relationships for multiple decades. And our software only has value because it is maintained and operated by staff who appreciate the complexity and importance of environmental work.

Mr. Hawthorne has been with Locus since 1999, working on development and implementation of services and solutions in the areas of environmental compliance, remediation, and sustainability. As President, he currently leads the overall product development and operations of the company. As a seasoned environmental and engineering executive, Hawthorne incorporates innovative analytical tools and methods to develop strategies for customers for portfolio analysis, project implementation, and management. His comprehensive knowledge of technical and environmental compliance best practices and laws enables him to create customized, cost-effective, and customer-focused solutions for the specialized needs of each customer.

Mr. Hawthorne holds an M.S. in Environmental Engineering from Stanford University and B.S. degrees in Geology and Geological Engineering from Purdue University. He is registered both as a Professional Engineer and Professional Geologist, and is also accredited as Lead Verifier for the Greenhouse Gas Emissions and Low Carbon Fuel Standard programs by the California Air Resources Board.

There are two promising technologies that are about to change how we aggregate and manage EHS+S data: artificial intelligence (AI) and blockchain. When it comes to technology, history has consistently shown that the cost will always decrease, and its impact will increase over time. We still lack access to enough global information to allow AI to make a significant dent in global greenhouse gas (GHG) emissions by merely providing better tools for emissions management. For example, the vast majority of energy consumption is wasted on water treatment and movement. AI can help optimize both. Along the way, water quality management becomes an add-on app.

AI is a collective term for technologies that can sense their environment, think, learn, and act in response to what they’re detecting and their objectives. Possible applications include (1) automation of routine tasks like sampling and analysis of water samples, (2) segregation of waste disposal streams based on the waste containers’ contents, (3) augmentation of human decision-making, and (4) automation of water treatment systems. AI systems can greatly aid the process of discovery: processing and analyzing vast amounts of data to spot and act on patterns, skills that are difficult for humans to match. AI can be harnessed in a wide range of EHS compliance activities and situations to help manage environmental impacts and climate change. Some examples of applications include permit interpretation and response to regulatory agencies, precision sampling, predicting natural attenuation of chemicals in water or air, managing sustainable supply chains, automating environmental monitoring and enforcement, and enhanced sampling and analysis based on real-time weather forecasts. Applying AI in water resource prediction, management, and monitoring can help to ameliorate the global water crisis by reducing or eliminating waste, as well as lowering costs and lessening environmental impacts. The same holds for air emissions management.

The onset of blockchain technology will have an even bigger impact. First, it will liberate data; second, it will decentralize monitoring while simultaneously centralizing emissions management. It may sound contradictory, but we need to decentralize in order to centralize management and aggregate relevant data across corporations and governmental organizations without jeopardizing anyone’s privacy. That is the power of blockchain technology. Blockchain technology will eliminate the need for costly synchronization among stakeholders: corporations, regulators, consultants, labs, and the public. What we need is secure and easy access to any data with infinite scalability. It is inevitable that blockchain technology will become more accessible with reduced infrastructure over the next few decades. By reduced infrastructure, I mean replacing massive centralized databases, controlled by one of the big four internet companies under the hub-and-spoke model, with device-to-device communication with no intermediaries.
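The tamper-evidence idea underlying blockchain can be shown with a hash-linked ledger, sketched below with Python’s standard `hashlib`. This sketch covers only hash chaining. It leaves out the distributed consensus and peer-to-peer replication that make an actual blockchain decentralized. The record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(payload: dict, prev_hash: str) -> str:
    """SHA-256 over the record together with the previous block's hash."""
    data = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append(chain: list[dict], payload: dict) -> None:
    """Add a record whose hash commits to everything before it."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev, "hash": block_hash(payload, prev)})


def verify(chain: list[dict]) -> bool:
    """Recompute every link; editing any earlier record breaks the chain."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(block["payload"], prev):
            return False
        prev = block["hash"]
    return True


ledger: list[dict] = []
append(ledger, {"facility": "FAC-001", "co2e_t": 1520.5})
append(ledger, {"facility": "FAC-002", "co2e_t": 310.0})
print(verify(ledger))                  # True
ledger[0]["payload"]["co2e_t"] = 1.0   # tamper with an earlier record
print(verify(ledger))                  # False
```

Any stakeholder holding a copy of the chain can run the same check independently, which is what removes the need to reconcile records through a central intermediary.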

This post was originally published in Environmental Business Journal in June of 2020.

The Locus Technologies ESG Survey Tool enables users to email surveys and questionnaires directly from Locus to their supply chain. This is achieved without requiring those receiving surveys to create usernames and credentials.

When surveys are issued, the tool generates a secure link for each email recipient. Email recipients click the link and respond to the survey or questionnaire without having to create a Locus username and password, and the data is captured within Locus software for ESG purposes. Recipients of the link receive access only to their survey form and nothing else in the system, and the links expire within a prescribed timeframe to further strengthen security.
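One standard way to build links like these is to sign the survey ID, recipient, and expiry time with an HMAC, so the server can verify a link without any user account. This approach is assumed here for illustration and is not taken from Locus’s implementation. The secret key, base URL, and function names are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlencode, urlparse

SECRET_KEY = b"server-side-secret"       # hypothetical; keep real keys out of code
BASE_URL = "https://example.com/survey"  # hypothetical endpoint


def _sign(payload: str) -> str:
    return hmac.new(SECRET_KEY, payload.encode("utf-8"), hashlib.sha256).hexdigest()


def make_link(survey_id: str, recipient: str, ttl_seconds: int, now: float) -> str:
    """Link naming one survey and one recipient that stops working at expiry."""
    payload = f"{survey_id}:{recipient}:{int(now + ttl_seconds)}"
    return f"{BASE_URL}?{urlencode({'p': payload, 'sig': _sign(payload)})}"


def open_link(link: str, now: float) -> Optional[Tuple[str, str]]:
    """Return (survey_id, recipient) if the link is authentic and unexpired."""
    query = parse_qs(urlparse(link).query)
    payload, sig = query["p"][0], query["sig"][0]
    if not hmac.compare_digest(sig, _sign(payload)):
        return None  # altered or forged link
    survey_id, rest = payload.split(":", 1)
    recipient, expires = rest.rsplit(":", 1)
    if now > int(expires):
        return None  # link has expired
    return survey_id, recipient


t0 = time.time()
link = make_link("supplier-2022", "vendor@example.com", ttl_seconds=7 * 24 * 3600, now=t0)
print(open_link(link, now=t0 + 3600))           # ('supplier-2022', 'vendor@example.com')
print(open_link(link, now=t0 + 8 * 24 * 3600))  # None (expired)
```

Because the signature covers the recipient and the expiry, editing either part of the link invalidates it.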

The survey tool securely streamlines data collection from external entities who would traditionally never be given access to the system, including suppliers, vendors, sales channels, and consultants. Once collected, the data can immediately be used for ESG calculations and reporting.

In a Software as a Service (SaaS) delivery model, service uptime is vital for several reasons. Besides the obvious need to access the service over the internet at any given time and stay connected to it 24/7/365, there are additional reasons why service uptime is essential. One of them is that uptime offers a quick way to verify the vendor’s software architecture and how well it fits the web.

Locus is committed to achieving and maintaining the trust of our customers. Integral to this mission is providing a robust compliance program that carefully considers data protection matters and service uptime across our cloud services. Security, service uptime, and multitenancy come standard at Locus and, for the last 25 years, have been the three most essential pillars for delivering our cloud software. Our real-time status monitoring (run by an independent provider of web monitoring services) provides transparency around service availability and performance for Locus’ ESG and EHS compliance SaaS products. Earlier, I discussed the importance of multitenancy in detail. In this article, I will cover the importance of service uptime as one measure for determining whether a software vendor is running genuine multitenant software.

If your software vendor cannot share uptime statistics across all customers in real time, they most likely do not run on a multitenant SaaS platform. One of the benefits of SaaS multitenancy (one that is frequently overlooked during the customer software selection process) is that all customers are on the same instance and version of the software at all times. For that reason, there is no versioning of software applications. Did you ever see a version number for Google’s or Amazon’s software? Yet they serve millions of users simultaneously and are constantly upgraded. This is because multitenant software typically follows a rolling upgrade program of incremental and continuous improvements. It is an entirely new architectural approach to software delivery and maintenance, one that frees customers from the tyranny of frequent and costly upgrades and upsells from greedy vendors. Companies have to develop applications from the ground up for multitenancy, and the good thing is that they cannot fake it. Let’s take a deeper dive into multitenancy.

A genuinely multitenant software provider can publish its software uptime across all customers in real time. Locus, for example, has been publishing its service uptime in real time across all customers since 2009. Locus’s track record speaks for itself: Locus Platform and EIM have maintained a proven uptime record of 99.9+ percent for years. To ensure maximum uptime and continuous availability, Locus provides redundant data protection and the most advanced facilities protection available, along with a complete data recovery plan. This is not possible with single-tenant applications, as each customer has its own software instance and probably a different version. Some customers may be down while others are up, so there is generally no meaningful way to aggregate software uptime. The fastest way to find out whether a software vendor offers genuine multitenant SaaS or is faking it is to check whether it publishes its applications’ uptime online, in real time, usually through an independent third party.
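To make a figure like “99.9+ percent” concrete, the sketch below computes uptime from a series of monitoring probes and converts an uptime target into a downtime budget. It assumes uptime is measured as the share of successful probes from an external monitor; commercial monitoring services may define availability differently (for example, by time windows), and the function names are hypothetical. On a multitenant platform, one probe series covers every customer because they all share the same instance.

```python
from datetime import timedelta


def uptime_percent(checks: list[bool]) -> float:
    """Percentage of monitoring probes that succeeded (True = service responded)."""
    if not checks:
        raise ValueError("no monitoring checks recorded")
    return 100.0 * sum(checks) / len(checks)


def downtime_budget(uptime_target: float, period: timedelta) -> timedelta:
    """Maximum downtime allowed over `period` while still meeting `uptime_target` (in percent)."""
    return period * (1 - uptime_target / 100.0)


# One probe series for the shared instance stands for every tenant on it.
shared_instance_checks = [True] * 999 + [False]
print(f"{uptime_percent(shared_instance_checks):.1f}%")  # 99.9%
print(downtime_budget(99.9, timedelta(days=365)))  # 8:45:36
```

In other words, 99.9 percent uptime still allows roughly 8.76 hours of downtime per year, which is why vendors often quote additional “nines.”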

Legacy client-server or single-tenant software cannot qualify as multitenant, and its vendor cannot publish uptime across all customers. Let’s look at the definitions:

Single-Tenant – A single instance of the software and supporting infrastructure serves a single customer. With single-tenancy, each customer has its own independent database and instance of the software. Essentially, nothing is shared with this option.

Multitenant – Multitenancy means that a single instance of the software and its supporting infrastructure serves multiple customers. Each customer shares the software application and also shares a single database. Each tenant’s data is isolated and remains invisible to other tenants.
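The “shared database, isolated data” idea in these definitions can be illustrated with a minimal sketch using Python’s built-in sqlite3 module. It assumes every shared table carries a tenant ID column and that every query is filtered by it; the table, column, and tenant names are hypothetical. Production systems usually enforce this filter centrally (for example, in a data-access layer or with database row-level security) rather than trusting each individual query.

```python
import sqlite3

# One shared database and schema for all tenants; every row carries its tenant's ID.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE samples (tenant_id TEXT NOT NULL, site TEXT NOT NULL, result REAL NOT NULL)"
)
# Index on tenant_id so per-tenant queries stay fast as the shared table grows.
conn.execute("CREATE INDEX idx_samples_tenant ON samples (tenant_id)")
conn.executemany(
    "INSERT INTO samples (tenant_id, site, result) VALUES (?, ?, ?)",
    [("acme", "Well-1", 4.2), ("acme", "Well-2", 3.1), ("globex", "Outfall-A", 7.8)],
)


def samples_for(tenant_id: str) -> list[tuple[str, float]]:
    """Return only the calling tenant's rows; the tenant filter is applied on every query."""
    rows = conn.execute(
        "SELECT site, result FROM samples WHERE tenant_id = ? ORDER BY site",
        (tenant_id,),
    )
    return rows.fetchall()


print(samples_for("acme"))    # [('Well-1', 4.2), ('Well-2', 3.1)]
print(samples_for("globex"))  # [('Outfall-A', 7.8)]
```

Both tenants run on the same application code and the same database, yet neither can see the other’s rows.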

A multitenant SaaS provider’s resources are focused on maintaining a single, current (and only) version of the software platform rather than being spread out in an attempt to support multiple software versions for customers. If a provider isn’t using multitenancy, it may be hosting thousands of single-tenant customer implementations. Trying to maintain that is too costly for the vendor, and sooner or later, those costs become the customers’ costs.

A vendor invested in on-premise, hosted, and hybrid models cannot commit to providing all the benefits of a true SaaS model due to conflicting revenue models. Its resources will be spread thin supporting multiple software versions rather than driving SaaS innovation. Additionally, if the vendor makes most of its revenue selling on-premise software, it is difficult for it to fully commit to a proper SaaS solution, since most of its resources go to supporting the on-premise software. In summary, a vendor is either multitenant or not; there is nothing in between. If a vendor installs a single application on the customer’s premises or in a single-tenant cloud, it does not qualify to be called multitenant SaaS.

Before you engage future vendors for your enterprise ESG reporting or EHS compliance software, assuming you have already decided to go with a SaaS solution, ask this simple question:

Can you share your software uptime across ALL your customers in real-time? If the answer is no, pass.

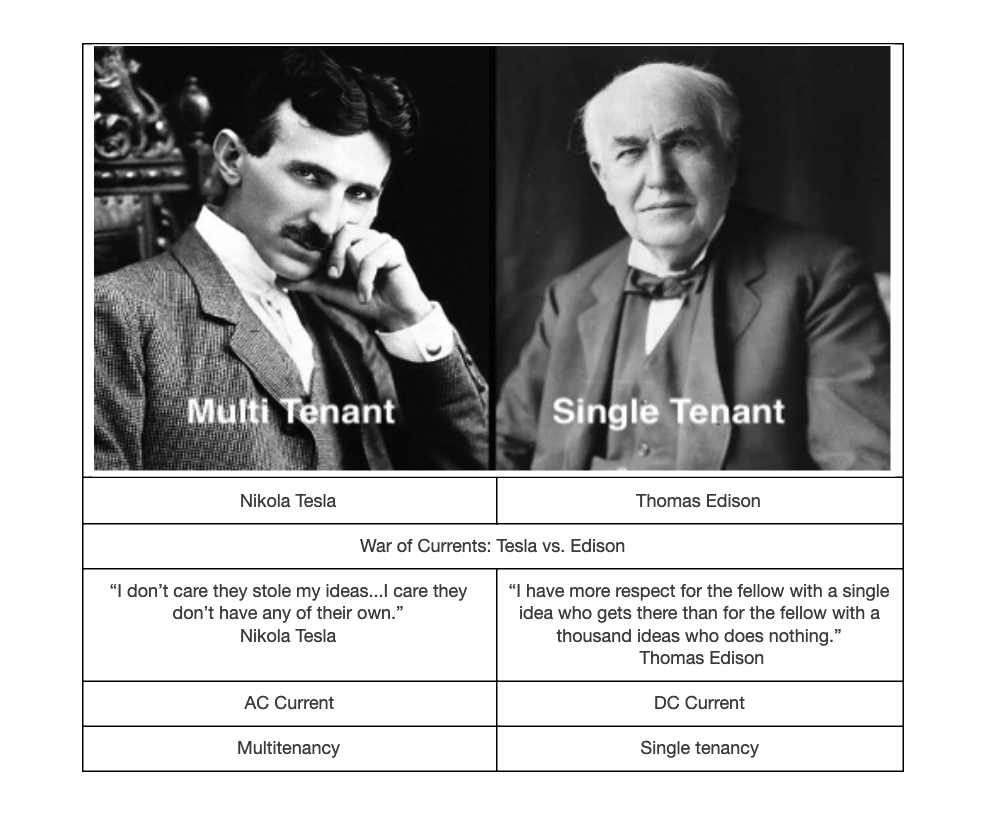

And if a vendor suddenly introduces a “multitenant” model after selling an on-premises or single-tenant version of its software for 10+ years, who would want to migrate to that experimental cloud without putting the contract out to bid and exploring a switch to well-established, market-tested multitenant providers? The first-mover advantage in multitenancy is considerable for any vendor. Still not convinced? Let me offer a simple analogy to drive home why service uptime and multitenancy matter: Tesla vs. Edison, the War of Currents.

The War of Currents was a series of events surrounding the introduction of competing electric power transmission systems in the late 1880s and early 1890s. It pitted companies against one another and involved a debate over the cost and convenience of electricity generation and distribution systems, electrical safety, and a media/propaganda campaign. The leading players were Thomas Edison’s Edison Electric Light Company, backing direct current (DC), and the supporters of alternating current (AC), based on Nikola Tesla’s inventions and backed by Westinghouse.

With electricity supplies in their infancy, much depended on choosing the right technology to power homes and businesses across the country. The Edison-led group argued for DC, which required a power generating station every few city blocks (single-tenant model). In contrast, the AC group advocated for centralized generation with transmission lines that could move electricity great distances with minimal loss (multitenant model).

The lower cost of AC power distribution and the need for fewer generating stations eventually prevailed. Multitenancy is the equivalent of AC in terms of cost, convenience, and network effect. You can read more about how this analogy relates to SaaS in Nicholas Carr’s book “The Big Switch.” It’s the best read so far about the significance of the shift to multitenant cloud computing. Unfortunately, the ESG/EHS software industry has lagged in adopting multitenancy.

Given these fundamental differences between the modes of delivering software as a service, it is clear that the future lies with the multitenant model.

Whether all customer data sits in one database or in several is of no consequence to the customer. The argument against a shared database is like asserting that companies “do not want to put all their money into the same bank account as their competitors,” when what those companies are actually doing is putting their money into different accounts at the same bank.

When customers of a financial institution share what does not need to be partitioned (for example, the transactional logic, database maintenance tools, security, physical infrastructure, and insurance offered by a major financial institution), they enjoy advantages of security, capacity, consistency, and reliability that could not be delivered affordably in isolated parallel systems.

Locus has implemented procedures designed to ensure that customer data is processed only as instructed by the customer throughout the entire chain of processing activities by Locus and its subprocessors. Amazon Web Services, Inc. (“AWS”) provides the infrastructure used by Locus to host or process customer data. Locus hosts its SaaS on AWS using a multitenant architecture designed to segregate and restrict customer data access based on business needs. The architecture provides effective logical data separation for different customers via customer-specific “Organization IDs” and supports role-based access privileges for customers and users. Customer interactions with Locus services likewise operate in an architecture that provides logical data separation via customer-specific accounts. Additional data segregation ensures separate environments for various functions, especially testing and production.
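The two layers described above, separation by Organization ID and role-based privileges within an organization, can be sketched as a single authorization check. This is an illustrative model under stated assumptions, not Locus’s code: the role names, permission strings, and classes are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "manage_users"},
}


@dataclass(frozen=True)
class User:
    name: str
    org_id: str  # hypothetical customer-specific Organization ID
    role: str


@dataclass(frozen=True)
class Record:
    record_id: str
    org_id: str


def can_access(user: User, record: Record, action: str) -> bool:
    """Allow an action only if the record belongs to the user's organization and the role grants it."""
    if user.org_id != record.org_id:
        return False  # logical separation: other organizations' data is invisible
    return action in ROLE_PERMISSIONS.get(user.role, set())  # unknown roles get no access


alice = User("alice", "org-1", "editor")
print(can_access(alice, Record("r1", "org-1"), "write"))  # True
print(can_access(alice, Record("r2", "org-2"), "read"))   # False
```

Checking the organization first means a misconfigured role can never expose another customer’s records; roles only narrow access within an organization.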

Multitenancy yields a compelling combination of efficiency and capability in enterprise cloud applications and cloud application platforms without sacrificing flexibility or governance.

Let’s look back at the most exciting new features and changes made in Locus Platform during 2019!

[sc_icon_with_text icon="tasks" icon_shape="circle" icon_color="#ffffff" icon_background_color="#52a6ea" icon_size="big" level="h3"]

Two additional types of task periodicity have been added: Triggered tasks, which allow the automatic creation of a Task based on the creation of a triggering event (e.g., a spill or storm event), and Sequenced tasks, which allow the creation of a series of tasks in a designated order. Learn more about our compliance and task management here.[/sc_icon_with_text]
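As a rough illustration of these two periodicity types, the sketch below models a triggering event that creates tasks and, optionally, chains them so that each task can start only after the previous one is done. The event names, task titles, and classes are hypothetical and are not Locus Platform’s data model.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from a triggering event type to the tasks it should create.
TRIGGERS: dict[str, list[str]] = {
    "spill": ["Contain spill", "Notify regulator", "Submit spill report"],
    "storm": ["Inspect stormwater outfalls"],
}


@dataclass
class Task:
    title: str
    depends_on: Optional["Task"] = None  # previous task in a sequence, if any
    done: bool = False

    def can_start(self) -> bool:
        """A sequenced task may start only once its predecessor is complete."""
        return self.depends_on is None or self.depends_on.done


def on_event(event_type: str, sequenced: bool = True) -> list[Task]:
    """Create the tasks triggered by an event; if sequenced, each waits for the previous one."""
    tasks: list[Task] = []
    for title in TRIGGERS.get(event_type, []):
        previous = tasks[-1] if (sequenced and tasks) else None
        tasks.append(Task(title, depends_on=previous))
    return tasks


spill_tasks = on_event("spill")
print([t.can_start() for t in spill_tasks])  # [True, False, False]
spill_tasks[0].done = True
print([t.can_start() for t in spill_tasks])  # [True, True, False]
```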

[sc_icon_with_text icon="mobile" icon_shape="circle" icon_color="#ffffff" icon_background_color="#9ac63f" icon_size="big" level="h3"]

Users can now create a mobile version of any data input form. Every form in the desktop platform can be mobile-enabled, so you can introduce new ways of streamlining data collection to your team.[/sc_icon_with_text]

[sc_icon_with_text icon="workflow" icon_shape="circle" icon_color="#ffffff" icon_background_color="#3766b5" icon_size="big" level="h3"]

‘Process Flows’ have been added to guide users through completing processes with a simple step-by-step interface.[/sc_icon_with_text]

[sc_icon_with_text icon="facility" icon_shape="circle" icon_color="#ffffff" icon_background_color="#52a6ea" icon_size="big" level="h3"]

Our expanded Facilities Management App lets you map all locations at the enterprise level, navigate your facilities hierarchy to review information, and quickly take action at every level. Locus Facilities is a comprehensive facility management application that aims to increase the efficiency of customer operations and centralize important company information.[/sc_icon_with_text]

[sc_icon_with_text icon="settings–configuration" icon_shape="circle" icon_color="#ffffff" icon_background_color="#9ac63f" icon_size="big" level="h3"]

Users can choose from existing portlets (found on the dashboard pages) to customize their landing page to their unique needs. Create custom dashboards to highlight exactly the information you want in any format (charts, maps, tables, tree maps, diagrams, and more).[/sc_icon_with_text]

[sc_icon_with_text icon="email–contact" icon_shape="circle" icon_color="#ffffff" icon_background_color="#3766b5" icon_size="big" level="h3"]

Add notes to any record by sending an email directly into the system. Anyone can add to or append to a record in the system simply through email.[/sc_icon_with_text]
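One way an email-to-record feature like this can work is to encode the target record in the recipient address and append the message’s plain-text body as a note. The sketch below uses Python’s standard `email` package; the `notes+<record ID>@example.com` addressing scheme, the in-memory record store, and the function name are hypothetical, and a real system would also authenticate senders before accepting notes.

```python
import re
from email import message_from_string
from email.policy import default
from typing import Optional

# Hypothetical addressing scheme: the record ID travels in the "+tag" part of the address.
RECORD_ADDRESS = re.compile(r"notes\+(?P<record_id>[A-Za-z0-9-]+)@example\.com")

# Stand-in for the record store: record ID -> list of notes.
records: dict[str, list[str]] = {"REC-42": []}


def ingest_email(raw: str) -> Optional[str]:
    """Append the plain-text body of an inbound email to the addressed record; return its ID."""
    msg = message_from_string(raw, policy=default)
    match = RECORD_ADDRESS.search(str(msg.get("To", "")))
    if match is None or match.group("record_id") not in records:
        return None  # not addressed to a known record
    body = msg.get_body(preferencelist=("plain",))
    if body is None:
        return None  # no plain-text part to store
    sender = str(msg.get("From", "unknown sender"))
    record_id = match.group("record_id")
    records[record_id].append(f"{sender}: {body.get_content().strip()}")
    return record_id


raw_email = (
    "From: Jane <jane@example.com>\n"
    "To: notes+REC-42@example.com\n"
    "Subject: Site visit\n"
    "\n"
    "Valve replaced at Well-1.\n"
)
print(ingest_email(raw_email))  # REC-42
```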

In the final part of our 4-part blog series, find out how cloud environmental databases enable better data stewardship and quality assurance.

299 Fairchild Drive

Mountain View, CA 94043

P: +1 (650) 960-1640

F: +1 (415) 360-5889

Locus Technologies provides cloud-based environmental software and mobile solutions for EHS, sustainability management, GHG reporting, water quality management, risk management, and analytical, geologic, and ecologic environmental data management.