Oil companies agree to reduce methane emissions

A coalition of the world’s oil companies agreed to reduce methane emissions from natural gas extraction—part of an effort to shore up the climate credentials of the hydrocarbon.

The Oil and Gas Climate Initiative said it would target reducing methane emissions to less than 0.25% of the total natural gas the group of 13 member companies produces by 2025.

Methane is the main component of natural gas. During extraction, transport, and processing, it often leaks into the environment. Methane is a much more potent greenhouse gas than CO2: in the short term it traps more heat, although it stays in the atmosphere for a shorter time. According to the International Energy Agency, one ton of methane is equivalent to as much as 87 tons of carbon dioxide over a 20-year time frame.
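To make the two figures above concrete, here is a minimal Python sketch of the arithmetic. It uses the 87-to-1 factor as an upper bound and the 0.25% target as a simple ratio. The function names and the sample tonnages are hypothetical, and it assumes emitted methane and produced gas are measured on the same basis.

```python
# Upper-bound 20-year equivalence quoted in the text: 1 ton of methane is
# equivalent to as much as 87 tons of CO2.
GWP20_UPPER = 87.0

# Target quoted in the text: methane emissions below 0.25% of gas produced.
TARGET_INTENSITY = 0.0025


def co2_equivalent_tons(methane_tons, gwp=GWP20_UPPER):
    """Convert tons of methane to tons of CO2-equivalent (upper bound)."""
    return methane_tons * gwp


def methane_intensity(methane_emitted, gas_produced):
    """Fraction of produced gas emitted as methane.

    Assumption: both arguments use the same unit and basis.
    """
    if gas_produced <= 0:
        raise ValueError("gas_produced must be positive")
    return methane_emitted / gas_produced


def meets_target(methane_emitted, gas_produced, target=TARGET_INTENSITY):
    """True if the emission intensity is below the target fraction."""
    return methane_intensity(methane_emitted, gas_produced) < target


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    emitted, produced = 2_000.0, 1_000_000.0
    print(f"intensity: {methane_intensity(emitted, produced):.4%}")
    print(f"meets 0.25% target: {meets_target(emitted, produced)}")
    print(f"CO2-equivalent (upper bound): {co2_equivalent_tons(emitted):,.0f} tons")
```

Because 87 is the upper end of the quoted range, the CO2-equivalent result should be read as a ceiling rather than a point estimate.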

Natural gas production is growing. Many big oil companies are increasing production of natural gas to offset higher emissions from other hydrocarbon and coal sources. The switch makes the oil-and-gas industry look better when demonstrating emission reduction to limit climate change.

For that reason, some oil companies, Shell in particular, have tilted their production mix toward more gas output.

According to a 2018 report by the Environmental Defense Fund, a nonprofit environmental advocacy group, as much as $34 billion of global gas supply is lost each year through leaks and venting. That is another valid reason to limit those methane escapes and add the proceeds to the bottom line. That in itself could fund part of the effort to stop or reduce the leaks.

Tips for choosing a GIS application for your environmental database

You can turbocharge your water data management by including a geographical information system (GIS) in your toolkit! Your data analysis efficiency also gets a huge boost if your data management system includes a GIS “out of the box” because you won’t have to manually transfer data to your GIS. All your data is seamlessly available in both systems.

Not all GIS packages are created equal, though. Here are some tips to consider when looking at mapping applications for your environmental data:

1) Confirm that integration is built-in and thorough

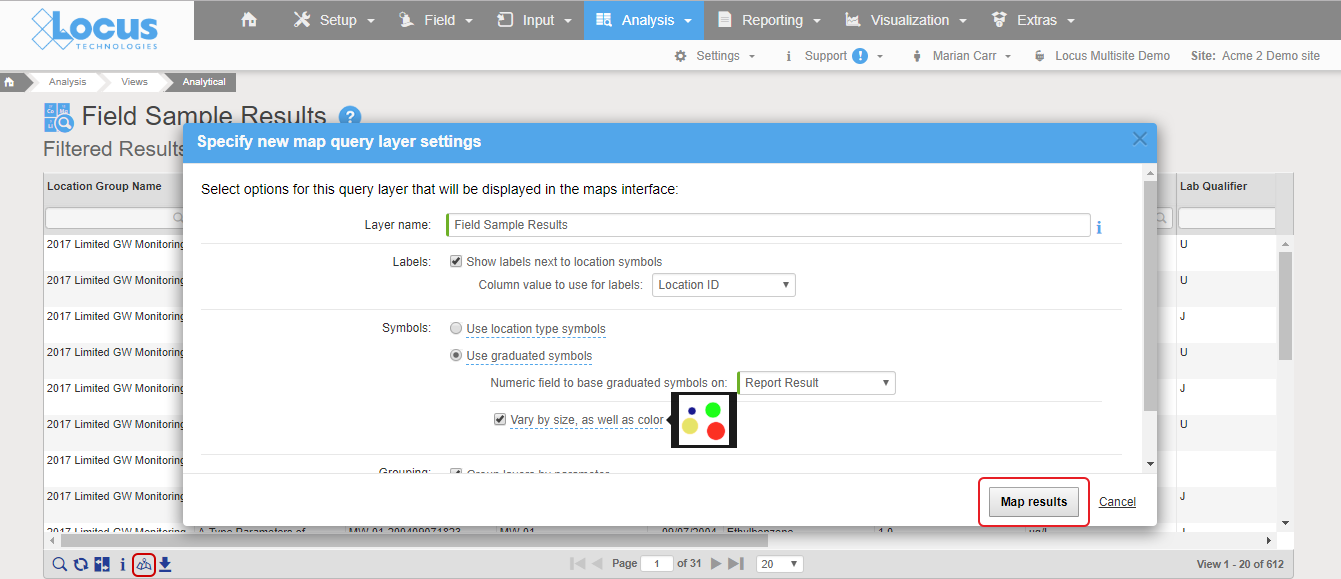

Mapping is easy when properly integrated with your environmental database. You should not need extra filters or add-on programs to visualize your data. Look for built-in availability of features, such as “click to map”, that take the guesswork and frustration out of mapping for meaningful results.

Good integration means mapping is as easy as clicking a “show on map” button. In Locus EIM, you can run a data query, click the “Show results on map” icon, change the default settings if desired, and instantly launch a detailed map with a range of query layers to review all chemicals at the locations of interest.

All the query results are presented as query layers, so you can review the results in detail. This map was created with the easy “show results on map” functionality, which anyone can use with no training.

2) Check for formatting customization options

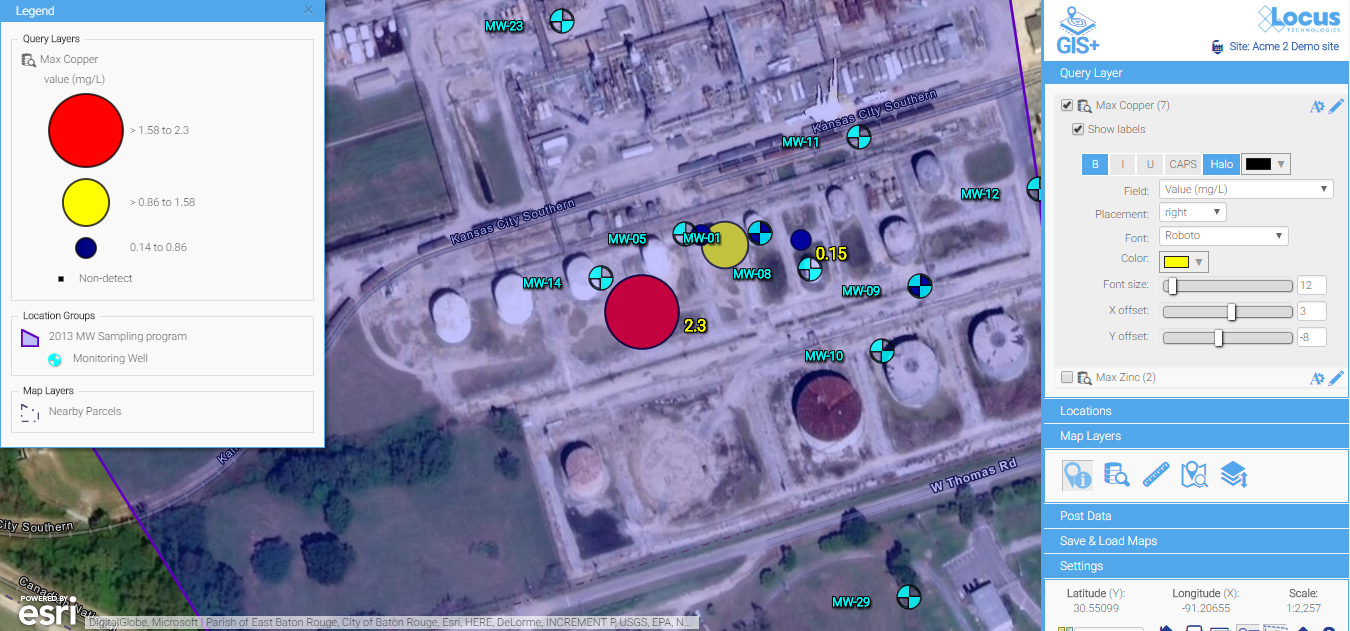

Look for easy editing tools to change the label colors, sizes, fonts, positioning, and symbols. Some map backgrounds make the default label styles hard to read and diminish the utility of the map, or if you’re displaying a large quantity of data, you’ll almost certainly need to tweak some display options to make these labels more readable.

Default label styles are legible on this background, but they are a bit hard to read.

A few simple updates to the font color, font sizes, label offset, and background color make for much easier reading. Changes are made via easy-to-use menus and are instantly updated on the map, so you have total control to make a perfectly labeled map.

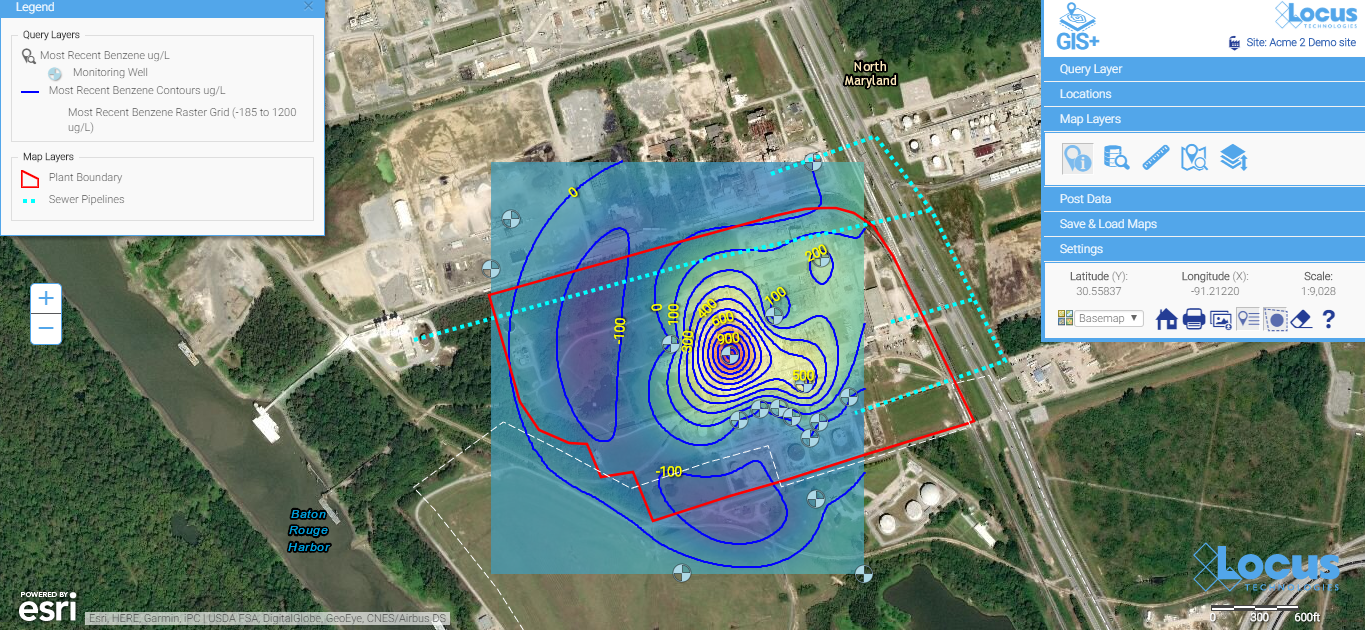

3) Look for built-in contouring for quick assessment of the extent of the spatial impact

Contours are a powerful visualization tool and can be a great way to interpret the movement of contaminants in groundwater. In the example below, you can clearly see the direction the plume is heading and the source of the problem. An integrated GIS with a contouring engine lets you go straight from a data query to a contour map, without exporting to external contouring or mapping packages. This is great for quick assessments for your project team.

Contour maps make it easy to visualize the source and extent of the plumes. They can be easily created with environmental database management systems that include basic contouring functionality.

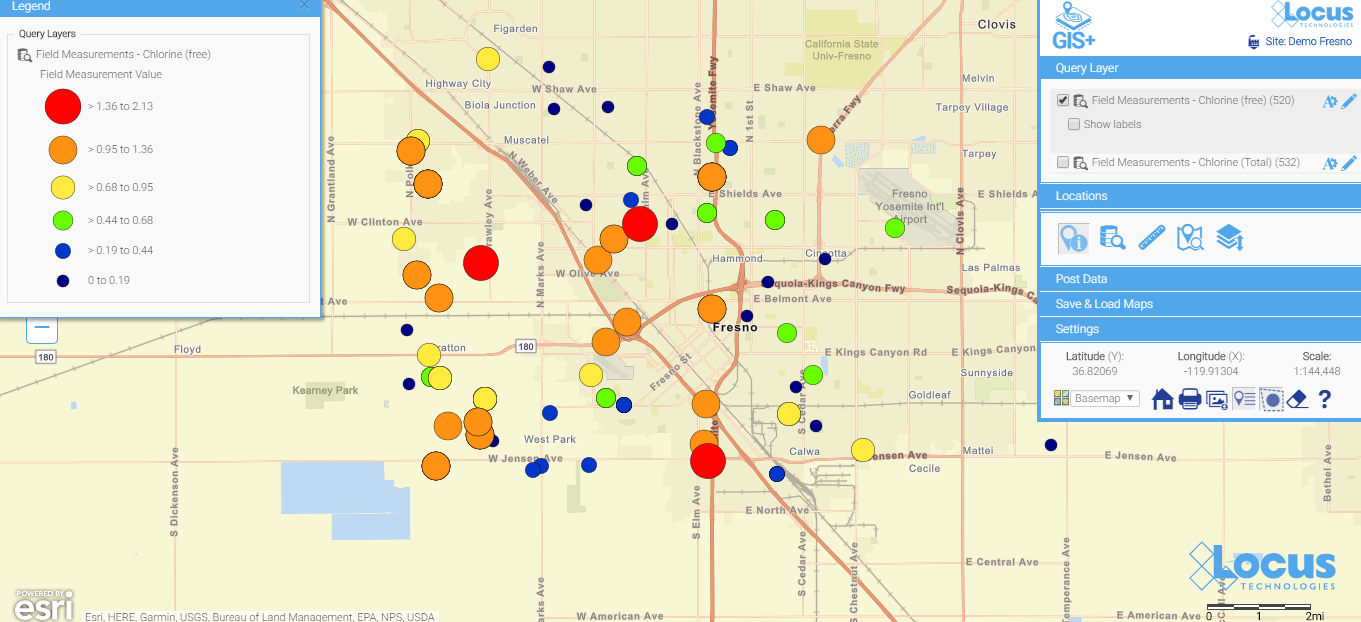

4) Look for something easy to use that doesn’t require staff with specialized mapping knowledge

Many companies use sophisticated and expensive mapping software for their needs. But the people running those systems are highly trained and often don’t have easy access to your environmental data. For routine data review and analysis, simple is better. Save the expensive, stand-alone GIS for wall-sized maps and complex regulatory reports.

Here is a simple map (which is saved, so anyone can run it) showing today’s chlorine data in a water distribution system. You don’t have to wait for the GIS department to create a map when you use a GIS that’s integrated with your environmental database system. When data are updated daily from field readings, these maps can be incredibly helpful for operational personnel.

See your data in new ways with Locus GIS for environmental management.

Locus offers integrated GIS/environmental data management solutions for organizations in many industries.

Taking the next steps

After viewing some of the many visualization possibilities in this blog, the next step is to make some maps happen!

- Make sure your environmental data system has integrated mapping options.

- Make sure your sampling/evaluation/monitoring locations have a consistent set of coordinates. If you have a mixed bag of coordinate systems, you will need to standardize. Otherwise, your maps will not be meaningful. Here are some options to try, as well as some good resource sites:

- https://epsg.io/ (transformations)

- http://www.earthpoint.us/Convert.aspx (transformations)

- Start with a few easy maps—and build from there.
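For the coordinate standardization step above, the idea is to convert every location to one reference system before mapping. The following is a minimal Python sketch, assuming a hypothetical record layout (`id`, `x`, `y`, `crs`) and only two systems: WGS84 longitude/latitude (EPSG:4326) and spherical Web Mercator (EPSG:3857). Real projects usually involve more systems, such as state plane or UTM, and are better served by a dedicated projection library or the transformation sites listed above.

```python
import math

# Sphere radius used by Web Mercator (EPSG:3857), in meters.
EARTH_RADIUS_M = 6378137.0


def web_mercator_to_wgs84(x, y):
    """Convert EPSG:3857 meters to (longitude, latitude) in degrees."""
    lon = math.degrees(x / EARTH_RADIUS_M)
    lat = math.degrees(2.0 * math.atan(math.exp(y / EARTH_RADIUS_M)) - math.pi / 2.0)
    return lon, lat


def wgs84_to_web_mercator(lon, lat):
    """Convert (longitude, latitude) in degrees to EPSG:3857 meters."""
    x = EARTH_RADIUS_M * math.radians(lon)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4.0 + math.radians(lat) / 2.0))
    return x, y


def standardize(locations):
    """Return copies of the location records, all expressed in EPSG:4326.

    Each record is a dict with hypothetical keys 'id', 'x', 'y', 'crs'.
    Records in an unsupported system raise ValueError rather than being
    passed through, so a mismatch cannot go unnoticed on the map.
    """
    result = []
    for loc in locations:
        if loc["crs"] == "EPSG:4326":
            lon, lat = loc["x"], loc["y"]
        elif loc["crs"] == "EPSG:3857":
            lon, lat = web_mercator_to_wgs84(loc["x"], loc["y"])
        else:
            raise ValueError(f"unsupported coordinate system: {loc['crs']}")
        result.append({"id": loc["id"], "x": lon, "y": lat, "crs": "EPSG:4326"})
    return result


if __name__ == "__main__":
    # Hypothetical sampling locations in two different systems.
    mixed = [
        {"id": "MW-01", "x": -122.06, "y": 37.40, "crs": "EPSG:4326"},
        {"id": "MW-02", "x": -13587657.0, "y": 4495600.0, "crs": "EPSG:3857"},
    ]
    for loc in standardize(mixed):
        print(loc["id"], round(loc["x"], 5), round(loc["y"], 5))
```

Rejecting unknown systems outright is a deliberate choice in this sketch: a location plotted in the wrong system tends to land far from the site, which is harder to diagnose than an explicit error.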

Happy mapping!

Locus EIM SaaS environmental software selected by Hudbay Minerals

MOUNTAIN VIEW, Calif., 19 June 2018 — Locus Technologies (Locus), the industry leader in multi-tenant SaaS EHS and environmental management software, is pleased to announce that Hudbay Minerals, a premier mining company in Canada, will use Locus EIM to improve their environmental data management for field and analytical data reporting. In addition to the standard features of Locus EIM, Hudbay Minerals is opting to use Locus’ GIS+ mapping solution, Locus Mobile for iOS, and the robust LocusDocs document management solution to enhance and streamline their processes.

Locus EIM and the integrated GIS+ solution will help Hudbay Minerals to improve efficiency of sampling and monitoring activities for both field and analytical data. The SaaS solution is enhanced by Locus Mobile for field data collection, which works offline without any internet connection.

“The Hudbay team in Arizona looks forward to working with the Locus team and using the system,” said Andre Lauzon, Vice President, Arizona Business Unit at Hudbay.

“By using the powerful smart mapping technology of Locus GIS+, powered by Esri and integrated with all the functionalities of Locus EIM, Hudbay Minerals can save data queries as map layers to create more impactful visual reports,” said Wes Hawthorne, president of Locus Technologies.

Shape of Water: Cape Town running out of drinking water

The city cut daily water use limits first to 87 liters and then 50 in a bid to avert shutting off supplies.

The city had set a 50-liter daily limit and had told citizens “Day Zero” was approaching when people would have to queue at standpipes.

But water-saving efforts in the South African city have seen the day pushed back from April to 27 August. Seasonal rains should mean that date is now averted, the city said. The shortages follow three years of low rainfall. The city had resorted to increasingly drastic measures to clamp down on water usage, including “naming and shaming” the 100 addresses using the most water and fining residents who failed to comply with the 50-liter (13-gallon) limit per person.

By comparison, the average California consumer uses some 322 liters (85 gallons) of water per day. Water use in California was highest in the summer months of June through September, when it averaged 412 liters per person per day. During the cooler and wetter months of January through March of 2016, average per capita water use was only 242 liters per person per day.

Although the risk that piped water supplies will be shut off this year has receded, politicians and environmentalists warn that the water crisis is here to stay in Cape Town, as year-on-year rainfall levels dwindle.

Shipping industry to discuss cuts in CO2 emissions

International shipping produces about 1,000 million tons of CO2 annually – that’s more than the entire German economy.

A meeting of the International Maritime Organisation in London that starts tomorrow will discuss how the shipping industry can radically reduce its CO2 emissions. The shipping industry, if it does not change the way it operates, will contribute almost a fifth of the global total of CO2 by 2050. A group of nations led by Brazil, Saudi Arabia, India, Panama, and Argentina is resisting CO2 targets for shipping. Their submission to the meeting says capping ships’ overall emissions would restrict world trade. It might also force goods on to less efficient forms of transport. This argument is dismissed by other countries, which believe shipping could benefit from a shift towards cleaner technology. European nations are proposing to shrink shipping emissions by 70-100 percent of their 2008 levels by 2050.

The problem has developed over many years. As the shipping industry is international, it evades the carbon-cutting influence of the annual UN talks on climate change, which are conducted on a national basis. Instead, the decisions have been left to the IMO, a body recently criticized for its lack of accountability and transparency. The IMO did agree on a design standard in 2011 ensuring that new ships should be 30 percent more efficient by 2025. But there is no rule to reduce emissions from the existing fleet.

The Clean Shipping Coalition, a green group focusing on ships, said shipping should conform to the agreement made in Paris to stabilize the global temperature increase as close as possible to 1.5C. The pressure is on the IMO to produce an ambitious policy. The EU has threatened that if the IMO doesn’t move far enough, the EU will take over regulating European shipping. That would see the IMO stripped of some of its authority.

Some say huge improvements in CO2 emissions from existing ships can easily be made by obliging them to travel more slowly. They say a carbon pricing system is needed.

WM Symposia 2018 provided an excellent showcase for Locus GIS+ in LANL’s Intellus website

At the annual WM Symposia, representatives from many different DOE sites and contractors gather to discuss cross-cutting technologies and approaches for managing the legacy waste from the DOE complex. This year, Locus’ customer Los Alamos National Laboratory (LANL) was the featured laboratory. During their presentation, they discussed Locus GIS+, which powers Intellus, their public-facing environmental monitoring database website.

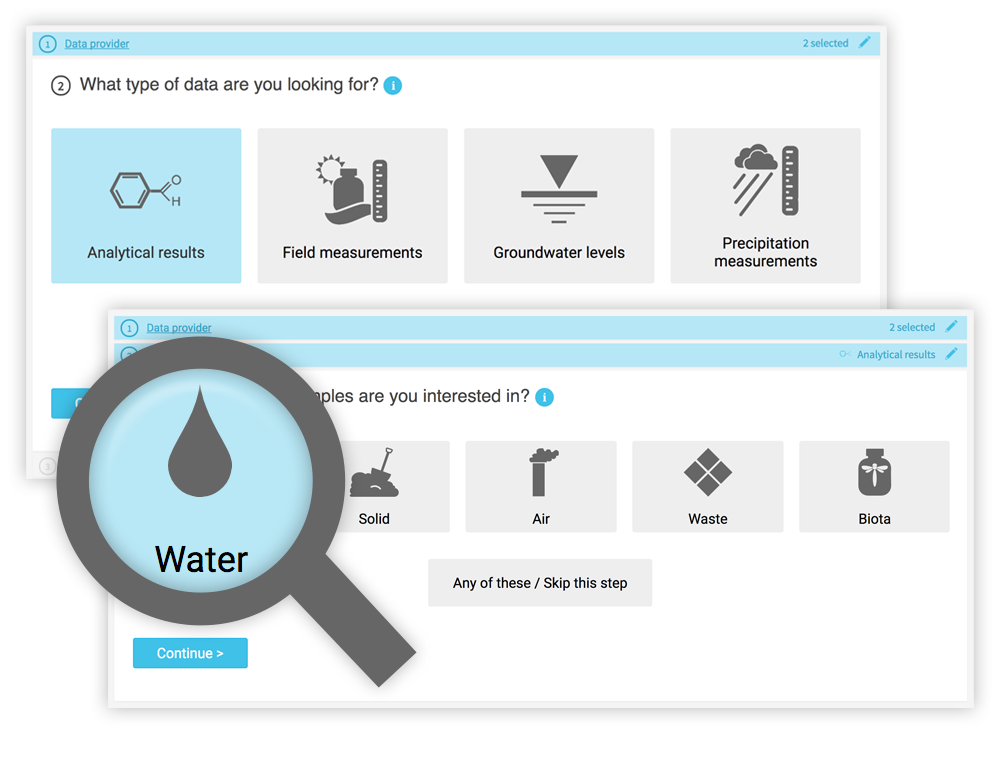

If you haven’t been to LANL’s Intellus website recently, you are in for a surprise! It was recently updated to better support casual users, and it features some of the best new tools Locus has to offer. Locus reimagined the basic query engine and created a new “Quick search” to streamline data retrieval for casual users. The guided “Quick search” simplifies data queries by stepping you through the filter selections for data sources, locations, dates, and parameters, providing context support at each step along the way.

While a knowledgeable environmental scientist may be able to easily navigate a highly technical system, that same operation is bound to be far more difficult for a layperson interested in what chemicals are in their water. Constructing the right query is not as simple as looking for a chemical in water—it really matters what type of water you want to look within. On the Intellus website (showing the environmental data from the LANL site), there are 16 different types of water (not including “water levels”). Using the latest web technologies and our domain expertise, Locus created a much easier way to get to the data of interest.

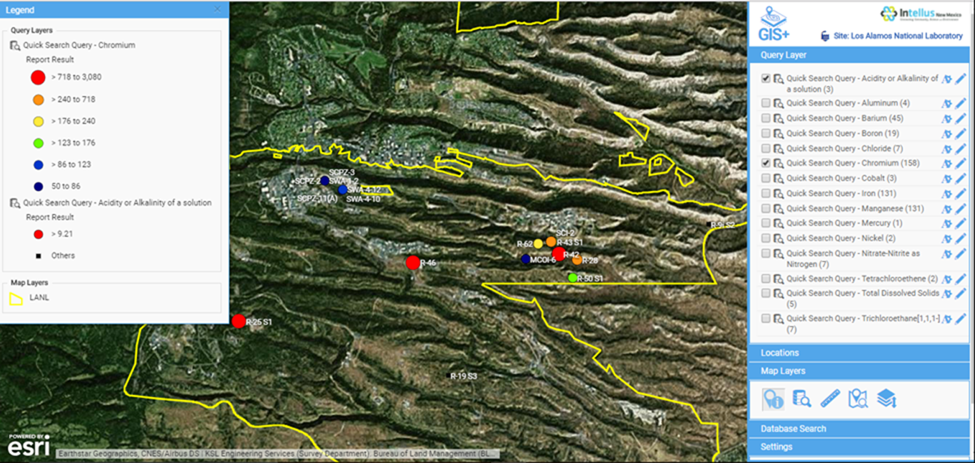

Just querying data is not necessarily the most intuitive activity to gain insights. Locus integrated our new GIS+ visualization engine to allow users to instantly see all the data they just queried in detailed, context-rich maps.

Intellus GIS+ map showing “Quick search” query results for chromium levels in the LANL area

Instead of a dense data grid, GIS+ gives users an instant visual representation of the issue, enabling them to quickly spot the source of the chemicals and review the data in the context of the environmental locations and site activities. Most importantly for Intellus users, this type of detailed map requires no GIS expertise and is automatically created based on your query. This directly supports Intellus’ mission to provide transparency into LANL’s environmental monitoring and sampling activities.

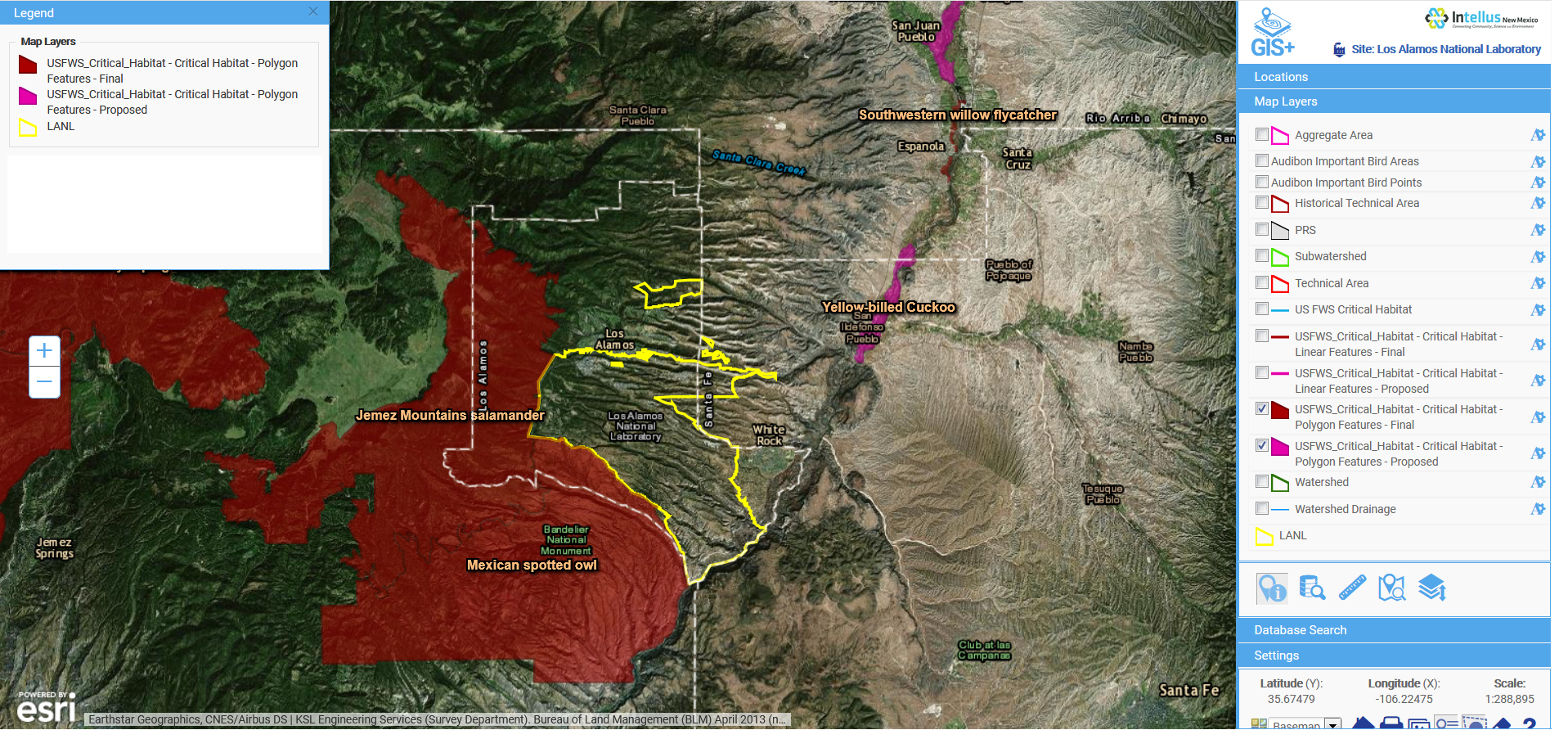

GIS+ also allows users (albeit with a bit more experience in GIS mapping) to integrate maps from a wide range of online sources to provide even more insight into the available data. In the example below, we overlaid the publicly available US Fish and Wildlife critical habitat maps with data from the LANL site to show the relationship of the site to critical habitats. This type of sophisticated analysis is the future of online GIS. Locus takes full advantage of these opportunities to visualize and integrate data from varying sources with our GIS+ tools, made simple for users and integrated with ArcGIS Online by Esri.

Intellus GIS+ map showing imported layers of US Fish and Wildlife critical habitats in relation to LANL environmental sampling data

Overall, Locus is very proud of our cross-cutting environmental information management tools. We were one of many WM18 attendees enjoying LANL’s presentation and getting even more ideas from the audience on the next steps for better environmental visualization.

Locus Technologies announces major improvements to its Railroad Incident application

MOUNTAIN VIEW, Calif., 27 November 2017 — Locus Technologies, the industry leader in cloud-based environmental compliance and information management EHS software, announces a suite of enhancements to its Railroad Incident application within its state-of-the-art Locus Platform SaaS solution—a scalable, robust app-building platform for easily designing and deploying apps that precisely conform to an organization’s existing business processes and requirements.

Since 2013, Locus has maintained its position on the cutting edge of railroad industry EHS solutions, as one of the first Railroad Incident software providers in the market assisting U.S. Federal Railroad Administration (FRA)-regulated organizations in maintaining compliance.

The FRA imposes rigorous reporting requirements upon Class II railroads, and maintaining compliance remains difficult for many EHS departments. While railroad safety and risk mitigation are top priorities for these departments, many are finding that trying to stay up-to-date with the latest regulations while using legacy, homegrown systems to manage compliance programs is nearly impossible.

Locus found that EHS professionals who are tasked with completing many FRA forms for each incident considered it an arduous and lengthy process, requiring multiple checks to prevent errors and oversights. Keeping in line with its guiding principle of customer success, Locus developed its Railroad Incident application so that users could easily and efficiently manage and report railroad accidents and incidents, with built-in quality control checks to verify that all reporting is in compliance with FRA regulations. Locus software has successfully generated hundreds of these reports with a single-click solution that instantly completes FRA forms for direct submittal to the agency.

The Locus Platform Railroad Incident application includes:

- Easy-to-use data entry forms for incidents, with one-click incident section selection

- Ability to associate multiple injuries or illnesses to an incident

- State-of-the-art body image selector for injuries

- Dashboards to view incident trends and key metrics to aid in proactively preventing future incidents

- Cradle-to-grave root cause and corrective action analysis and tracking, easily configured to any company techniques or methods

- Custom configurable workflows and email notifications to align with existing business processes

- Excel plugin tool for bulk importing and editing incident, employee, and other organizational data

- Mobile-enabled incident forms

- Push-button FRA Form generation to expedite form completion by automatically generating PDFs with e-signature for FRA Forms 54, 55, 55a, 57, 97, and 98

- Optional integration with third-party APIs like SAP and PeopleSoft

“Locus is proud to have been supporting the railroad industry for years with this important functionality. When it comes to incident management, company managers have an easily accessible, all-encompassing view of what’s occurring across all of their different facilities, sites, and incident locations. Our easy-to-use data entry forms for railroad incidents with one-click incident section selection enables the seamless capture, analyzing, reporting, e-signing, and submittal of critical FRA-specific data—all from within our industry-tested Railroad Incident application,” said Wes Hawthorne, President of Locus Technologies. “Locus’ Railroad Incident application is a single repository in the cloud that offers railroad-specific functionality for managing incident tracking, investigations, and analyzing key safety metrics aimed at reducing accidents and mitigating risks.”

For more information about Locus’ Railroad Incident application and other EHS and sustainability solutions, visit https://www.locustec.com.

From the foundations of Rome to global carbon emissions reduction

Does the solution for over 5% of world CO2 emissions lie in the 2000-year-old concrete-making technology from ancient Rome?

Concrete is the second most consumed substance on Earth after water. Overall, humanity produces more than 10 billion tons (about 4 billion cubic meters) of concrete and cement per year. That’s about 1.3 tons for every person on the planet, more than any other material, including oil and coal. The consumption of concrete exceeds that of all other construction materials combined. The process of making modern cement and concrete has a heavy environmental penalty, being responsible for roughly 5% of global emissions of CO2.

Scientists explain ancient Rome’s long-lasting concrete

So could a greater understanding of the ancient Roman concrete mixture lead to greener building materials? That is what scientists may have discovered and published in a 2017 study, led by Marie Jackson of the University of Utah. Their study uncovered the Roman secrets for formulating some of the most long-lasting concrete yet discovered. Our ability to unlock the secrets of ancient concrete formulas depends on the interdisciplinary analytical approaches used by Jackson’s group and could lead to further discoveries that would reduce cement-based carbon emissions.

Unlike modern concrete, which erodes over time, the Roman concrete-like substance seemed to gain strength, particularly from exposure to sea water. And most importantly, the process generates fewer CO2 emissions and uses less energy and water than “modern” Portland cement-based concrete.

Data mining gains more cachet in construction sector

Locus is mentioned in ENR’s article about data mining, discussing how Locus software helps our long-time customer, Los Alamos National Laboratory, manage their environmental compliance and monitoring.

Locus Technologies

299 Fairchild Drive

Mountain View, CA 94043

P: +1 (650) 960-1640

F: +1 (415) 360-5889

Locus Technologies provides cloud-based environmental software and mobile solutions for EHS, sustainability management, GHG reporting, water quality management, risk management, and analytical, geologic, and ecologic environmental data management.